The Future of AI Commerce 💸 OpenAI's 100x Leap 🚀 Smooth Robots 💃

What you actually need to know about AI, weekly.

Welcome to this week in AI.

This week, we get a glimpse of what the future of commerce will look like. A new Chinese AI model is breaking visual language benchmarks, and finally, we check out a robot that is so smooth, people think it’s a guy in a morph suit.

Are you ready?

👋 If you’re new here, welcome!

Subscribe to get your AI insights every Thursday (usually).

A Glimpse into the Future of Autonomous Commerce

We saw the first-ever crypto transaction managed entirely by AI bots.

In a blog post, Coinbase CEO Brian Armstrong describes the transaction:

“What did one AI buy from another? Tokens! Not crypto tokens, but AI tokens (words basically from one LLM to another). They used tokens to buy tokens 🤯”

This milestone underscores a broader trend: various crypto firms are developing platforms to enable AI agents to conduct transactions autonomously.

Armstrong also recently said, “every checkout experience will need to support AI agents buying things soon”.

Coinbase has since demonstrated its commitment to integrating crypto wallets into LLMs with a suite of developer tools.

Why it Matters

The ability of AI agents to execute transactions autonomously is significant.

Imagine AI assistants booking flights, managing social media ad campaigns, or even participating in micro-tasks and receiving instant payments.

This shift empowers AI to move beyond content creation and into active economic participation, potentially transforming industries from customer service to freelance work.

It makes sense for the crypto industry to be an early adopter of this new financial paradigm: crypto wallets do not need to be tied to a human and can be easily integrated into AI agents.

While still nascent, these developments could enable a future where AI agents play an increasingly integral role in the economy.

The Open-Source AI Powering a Future of Visual Interaction

Qwen2-VL is a new, open-source visual language model by Alibaba Cloud.

It delivers industry-leading performance on various visual understanding benchmarks, outpacing even closed-source models like GPT-4o and Claude 3.5 Sonnet.

The model’s capabilities are extensive. It can process videos exceeding 20 minutes, understand multilingual text within images, and reason well enough to operate devices like mobile phones and robots.

Additionally, it supports function calling, enabling it to interact with external tools for real-time data retrieval (agentic behaviour).
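For the curious, here is a rough sketch of what function calling looks like from the application side. It is not Qwen2-VL's actual API: the tool name `get_weather`, the JSON shape, and the `dispatch` helper are all made up for illustration. The idea is simply that the model emits a structured request, and your code runs the matching function and hands back the result.

```python
import json

# Hypothetical tool: a real one would call a live weather service.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

# Registry of tools the application is willing to run (names are illustrative).
TOOLS = {"get_weather": get_weather}

def dispatch(tool_call_json: str) -> str:
    """Parse a model-emitted tool call and run the matching function."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call["arguments"])

# Assumed shape of what a model might emit; real models define their own format.
model_output = '{"name": "get_weather", "arguments": {"city": "Hangzhou"}}'
result = dispatch(model_output)
print(result)
```

In a real agent loop, the result would be sent back to the model so it can use the fresh data in its answer.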

Why it Matters

Qwen2-VL's capabilities represent a major leap forward in visual language understanding.

Imagine a future where you can ask your phone to explain a complex image or have a robot perform tasks based solely on what it sees.

Qwen2-VL’s open-source license helps make this future possible, opening doors to countless applications across various fields.

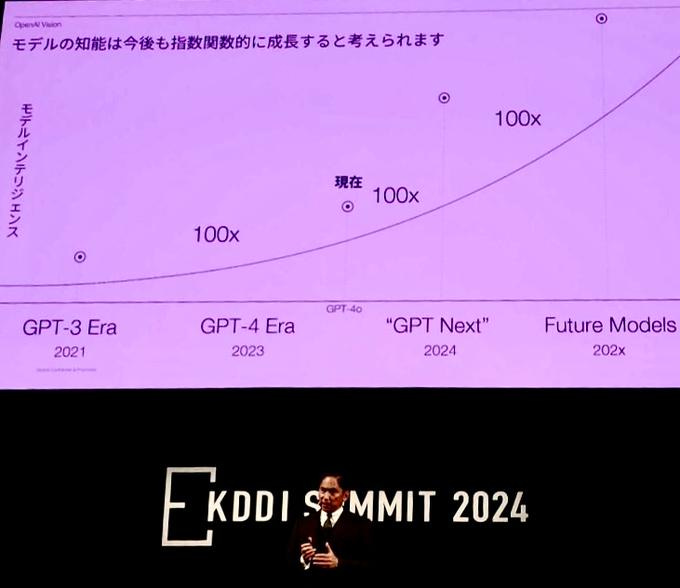

OpenAI's $100 Billion Valuation & A 100x Performance Leap

OpenAI is in discussions for a new funding round that could potentially increase the company's valuation to over $100 billion.

This substantial investment of roughly $20 billion underscores OpenAI's dominance, particularly with its models being used by 92% of Fortune 500 companies.

Shortly after this funding announcement, Tadao Nagasaki, CEO of OpenAI Japan, revealed a new AI model, calling it “GPT Next”.

Nagasaki shared his thoughts on it, comparing it to previous OpenAI models:

"The AI model called 'GPT Next' that will be released in the future will evolve nearly 100 times based on past performance. Unlike traditional software, AI technology grows exponentially. For this reason, we would like to support the creation of a world with AI as soon as possible."

Why it Matters

This news highlights the sheer amount of money needed to train, research and productise cutting-edge models.

It also emphasises OpenAI's continued dominance. While it competes for the top spot in the current generation of models, it is also training models in the background that are reportedly orders of magnitude beyond what is currently released.

GPT Next (potentially a distilled version of Strawberry) and Orion are set to leapfrog OpenAI’s competitors.

📰 Article by IT Media (in Japanese)

350 Million Downloads: Llama's Explosive Rise

The adoption of Meta's open-source Llama AI models has skyrocketed, nearing 350 million downloads.

This surge in popularity contrasts with the growth of closed-source models like OpenAI's ChatGPT, which recently reported 200 million weekly active users.

The substantial increase in Llama's monthly usage, particularly among major cloud service providers, shows the value of open-source models in democratising the technology.

Why it Matters

The rapid adoption of Llama signifies a growing preference for open-source AI models among developers and enterprises.

This trend highlights the value of transparency, customisability, and cost-effectiveness offered by open-source solutions like Llama.

📰 Llama 10x growth report - Meta AI

📰 OpenAI users exceed 200m weekly active users - Reuters

Magic AI's Breakthrough Unlocks AI's Coding Potential

Magic, an AI startup, has achieved a breakthrough in ultra-long context models, enabling AI to reason with up to 100 million tokens of information during inference.

They introduced HashHop, a novel evaluation method for these models, and successfully trained their first 100M token context model, LTM-2-mini.

Why it Matters

This breakthrough in ultra-long context models will make software development more efficient.

AI’s ability to ingest vast amounts of code, documentation, and libraries will allow these models to automate software development with full context.

It is worth noting that “Honeycomb”, a cutting-edge model designed to code, solves only 22.06% of tasks in the SWE coding benchmark.

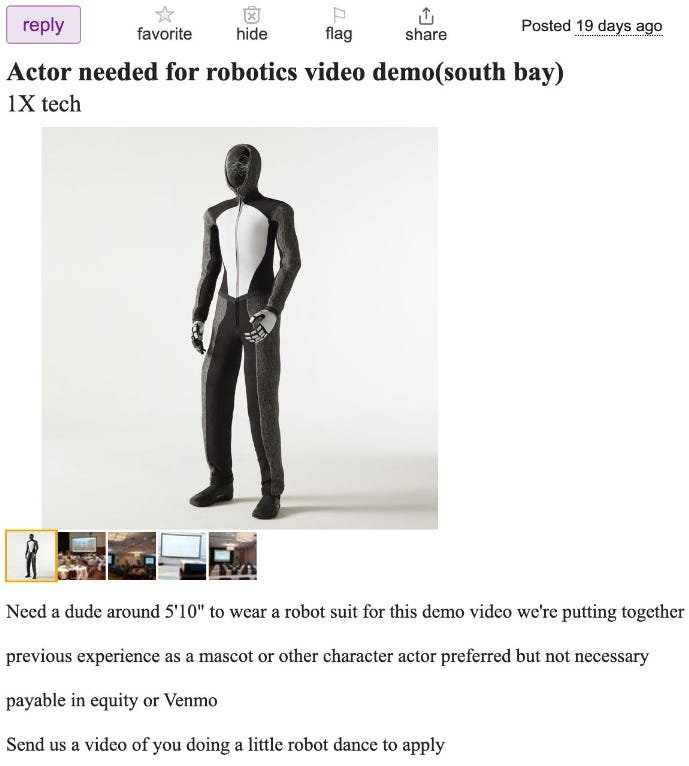

Humanoid Helper or Morph Suit Prank?

1X, a robotics company backed by OpenAI, has unveiled Neo Beta, a humanoid robot designed to take on household chores.

Standing 1.65 metres tall and weighing 30 kg, the robot will be trained using embodied learning, allowing it to continue learning and adapting to real-world scenarios.

Users will be able to interact with Neo Beta using natural spoken language, and the robot is designed to understand its environment to perform a variety of tasks.

Why it Matters

Neo Beta’s ability to learn and adapt to its surroundings will make it a valuable assistant in the home, capable of performing a wide range of tasks.

The robot's movements were so smooth in the demo that many believed it was a person in a morph suit, eliciting a torrent of memes.

As Neo Beta progresses from the beta stage to mass production, it will help drive prices down in the burgeoning humanoid robot market, making this style of robot ubiquitous.

That’s a Wrap!

If you want to chat about what I wrote, you can reach me through LinkedIn.

If you liked it, I’d appreciate a share!