⚔️ OpenAI's Exec Bloodbath, 👓 Meta's Orion AR Glasses, 🔍 Ancient Secrets in Peru & More!

Plus, OpenAI's DevDay, AI-Driven Chip Design & Much More

Welcome to this week in AI.

This week's AI news: OpenAI speeds up and lowers the cost of AI development, archaeologists use AI to uncover ancient secrets, and Meta reveals lightweight AR glasses for everyday use. Plus, learn how AI is revolutionising chip design.

Let’s get into it.

Don’t feel like reading? I’ve turned this newsletter into a podcast (15:29).

Don't Miss Out: UX for AI Meetup & Guide

Join me at the QLD AI Meetup on October 30th at The Precinct in Fortitude Valley, Brisbane, where I'll be discussing "Why UX Falls Short in The Age of AI: Redefining Interaction Models."

We'll explore the current state of AI interaction design, emerging strategies, and the future of UX. RSVP at the Meetup page.

Also, stay tuned for the release of my "Ultimate Guide For Designing AI Systems," a comprehensive resource for creating intuitive AI-driven experiences.

Meta's Orion: Pioneering the Future of Augmented Reality Glasses

Meta's Orion represents a significant advancement in AR technology.

These prototype glasses, weighing under 100 grams, are designed for comfortable everyday wear while offering a groundbreaking 70-degree field of view.

This wide field of view, achieved through innovative silicon carbide lenses, intricate waveguides, and microLED (µLED) projectors, allows for immersive experiences with holographic displays that seamlessly blend with the real world.

Beyond the display, Orion features an advanced input system combining voice control, eye tracking, and hand tracking with an EMG wristband.

This allows users to interact with the glasses naturally and intuitively, without the need for bulky controllers or hand gestures that disrupt social interaction.

Why it Matters

Orion's technological advancements offer a glimpse into the future of personal computing:

Expanded Field of View: The 70-degree field of view is significantly larger than what's currently available in AR glasses, enabling more immersive and engaging experiences. This allows for larger virtual displays, more interactive elements, and a greater sense of presence within the augmented environment.

Novel Display Technology: The use of silicon carbide lenses, intricate waveguides, and microLED (µLED) projectors is pushing the boundaries of display technology, resulting in a compact, lightweight design that delivers high-quality visuals.

Intuitive Interaction: The EMG wristband, combined with voice and eye tracking, offers a seamless and intuitive way to interact with the AR environment. This technology allows for precise control without requiring users to make obvious hand gestures, promoting a more natural and socially acceptable user experience.

Towards Everyday Wearability: Orion's lightweight design and comfortable form factor are crucial steps towards creating AR glasses that can be worn throughout the day. This focus on wearability is essential for AR to become a truly ubiquitous technology, seamlessly integrated into our daily lives.
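To put "larger virtual displays" in concrete terms, here is a back-of-the-envelope sketch of how wide a flat virtual screen can appear at a given distance for a given field of view. It treats the 70 degrees as a horizontal angle for simplicity (Meta's figure may be measured differently, for example diagonally), and the 2-metre viewing distance and the narrower 50-degree comparison are arbitrary assumptions, not specs of any real product.

```python
import math

def virtual_width(fov_degrees: float, distance_m: float) -> float:
    """Widest flat panel that fits inside a horizontal field of view at a given distance."""
    half_angle = math.radians(fov_degrees) / 2
    return 2 * distance_m * math.tan(half_angle)

# Compare a hypothetical narrower display with a 70-degree one, both viewed at 2 m.
for fov in (50, 70):
    print(f"{fov} deg at 2 m: {virtual_width(fov, 2.0):.2f} m wide")
```

Because width grows with the tangent of the half-angle, each extra degree of field of view buys progressively more screen area, which is why a jump to 70 degrees matters for immersion.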

OpenAI’s DevDay: Faster, Smarter and Cheaper

OpenAI hosted its DevDay 2024 event, announcing four major additions to its developer toolkit.

These new features aim to improve the capabilities and cost-effectiveness of applications built using OpenAI models.

Talk to AI: The Realtime API lets you create apps where you can have a voice conversation with an AI, just like talking to a person.

Better Image Understanding: Vision fine-tuning allows developers to quickly improve GPT-4o's ability to understand images, with improvements as high as 20% in accuracy for certain tasks.

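Save Money: Prompt Caching cuts input costs by up to 50% by reusing recently processed prompt content, such as long instructions repeated across requests, instead of charging full price for it every time.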

Smaller, Efficient Models: Model Distillation lets developers use the outputs of larger models such as GPT-4o to fine-tune smaller, cheaper models such as GPT-4o mini, so that more tasks can run on a model that is faster and less expensive to use.
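Prompt Caching's saving is easiest to see with a little arithmetic. The sketch below assumes that cached input tokens are billed at half price and that a long, repeated prompt prefix is what gets cached; the per-token price is a made-up placeholder, and real billing rules (minimum prompt lengths, how long a cache lives) are not modelled.

```python
from dataclasses import dataclass

# Hypothetical price in USD per million input tokens (not an official figure).
PRICE_PER_M_INPUT = 2.50
CACHED_DISCOUNT = 0.50  # assumption: cached input tokens cost half the normal rate

@dataclass
class Request:
    prompt_tokens: int  # total input tokens sent with the request
    cached_tokens: int  # tokens in a prefix the provider has already cached

def input_cost(req: Request) -> float:
    """Input cost of one request, with cached tokens billed at the discounted rate."""
    uncached = req.prompt_tokens - req.cached_tokens
    billed = uncached + req.cached_tokens * (1 - CACHED_DISCOUNT)
    return billed * PRICE_PER_M_INPUT / 1_000_000

# A 10,000-token set of instructions reused on every call, plus 500 fresh tokens each time.
first = Request(prompt_tokens=10_500, cached_tokens=0)
repeat = Request(prompt_tokens=10_500, cached_tokens=10_000)
print(f"first call:  ${input_cost(first):.5f}")
print(f"repeat call: ${input_cost(repeat):.5f}")
```

The saving approaches the full 50% only when almost all of the prompt is a repeated prefix; the fresh part of each request is still billed at the normal rate.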

Why It Matters

These new features provide developers with more powerful and efficient tools for building AI applications.

The Realtime API opens up possibilities for creating engaging voice-based experiences, while vision fine-tuning enhances the visual understanding capabilities of GPT-4o.

Prompt Caching and Model Distillation contribute to cost optimisation and improved performance, making OpenAI's models more accessible and practical for a wider range of applications.
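Distillation in the sense OpenAI describes, using a large "teacher" model's outputs to fine-tune a smaller "student" model, starts with building a training set from the teacher's answers. The sketch below shows that step only. `teacher_answer` and `build_distillation_set` are hypothetical helpers (the teacher is a placeholder rather than a real API call), and while the chat-style `messages` records follow the JSONL layout OpenAI's fine-tuning API accepts, check the official documentation before relying on the exact format.

```python
import json

def teacher_answer(question: str) -> str:
    # Hypothetical placeholder for a request to a large teacher model such as GPT-4o.
    return f"Answer to: {question}"

def build_distillation_set(questions, system_prompt):
    """Turn teacher outputs into chat-style fine-tuning records for a smaller student model."""
    records = []
    for q in questions:
        records.append({
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": q},
                # The teacher's answer becomes the target the student learns to imitate.
                {"role": "assistant", "content": teacher_answer(q)},
            ]
        })
    return records

questions = ["What is prompt caching?", "What is model distillation?"]
records = build_distillation_set(questions, "You are a concise assistant.")
jsonl = "\n".join(json.dumps(r) for r in records)  # one JSON object per line
print(jsonl)
```

The resulting file would then be used to fine-tune the smaller model, which is where the cost and speed savings come from.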

AI Uncovers Ancient Secrets in Peru

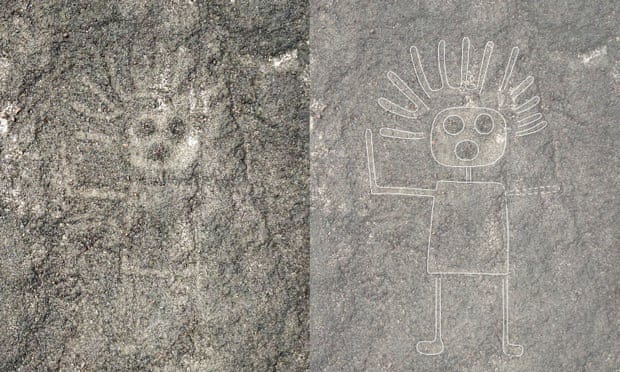

Archaeologists have uncovered 303 previously unknown geoglyphs near the famous Nazca Lines in Peru, nearly doubling the number of known figures at the site.

This remarkable discovery was made possible through a collaboration between Yamagata University and IBM Research, who utilised AI and low-flying drones to analyse the Nazca plateau.

These newly found geoglyphs, which are smaller than the famous Nazca Lines and date back to 200 BC, depict a variety of figures, including animals like parrots and monkeys, humans, and even decapitated heads.

This approach has accelerated the pace of archaeological discovery. As Johny Isla, Peru’s chief archaeologist for the Nazca Lines, noted:

"What used to take three or four years, can now be done in two or three days."

The use of AI allowed researchers to analyse vast quantities of geospatial data and identify areas where more geoglyphs might be found, dramatically improving the efficiency of the research.
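The general idea of letting AI narrow a huge survey area down to a shortlist can be sketched as a tile-and-rank loop. This is a generic illustration, not the Yamagata University and IBM Research pipeline: `score_tile` is a hypothetical, deterministic random stand-in for a trained image model, and the image size, tile size and threshold are arbitrary.

```python
import random

def make_tiles(width, height, tile):
    """Split a large survey image (in pixels) into square tiles, returned as top-left corners."""
    return [(x, y) for y in range(0, height, tile) for x in range(0, width, tile)]

def score_tile(x, y):
    # Hypothetical stand-in for a trained model estimating how likely
    # a tile is to contain a geoglyph (0.0 to 1.0). Seeded per tile so runs repeat.
    rng = random.Random(x * 100_003 + y)
    return rng.random()

def shortlist(width, height, tile, threshold):
    """Keep tiles whose score clears the threshold, most promising first."""
    scored = [(score_tile(x, y), (x, y)) for x, y in make_tiles(width, height, tile)]
    hits = [s for s in scored if s[0] >= threshold]
    return sorted(hits, reverse=True)

candidates = shortlist(width=4096, height=4096, tile=512, threshold=0.9)
for score, (x, y) in candidates:
    print(f"check tile at ({x}, {y}): score {score:.2f}")
```

The time saving comes from the ranking step: researchers inspect a handful of high-scoring tiles instead of every square metre of the plateau.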

Why it Matters

These findings provide valuable insights into the transition from the Paracas culture to the Nazca culture, offering a glimpse into the daily life and rituals of these ancient peoples.

The smaller geoglyphs, unlike the larger Nazca Lines, are believed to have served as signs or representations of family groups, showcasing a different aspect of their culture.

This discovery highlights the potential of AI to not only accelerate the pace of archaeological research but also to deepen our understanding of history and ancient civilisations.

📰 Article about the discoveries by The Guardian

AlphaChip: AI That Designs Chips

Google DeepMind's AlphaChip is an AI system that's changing how computer chips are designed.

Using a reinforcement learning approach similar to AlphaGo, AlphaChip treats chip design as a complex game, learning and improving with each placement of a circuit component.
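The "chip design as a game" framing can be illustrated with a toy placement environment: blocks are placed one at a time on a grid, and the score at the end is the negative half-perimeter wirelength (HPWL), a common proxy for total wire length. This is only a sketch of the framing. The netlist and grid are hypothetical, a random policy stands in for AlphaChip's trained neural network, and the published work's reward also accounts for factors such as congestion and density.

```python
import random
from itertools import product

GRID = 4  # toy 4x4 canvas; real chips are vastly larger

# Hypothetical netlist: each net lists the blocks it connects.
BLOCKS = ["A", "B", "C", "D"]
NETS = [("A", "B"), ("B", "C", "D"), ("A", "D")]

def hpwl(placement):
    """Half-perimeter wirelength: sum of each net's bounding-box width plus height."""
    total = 0
    for net in NETS:
        xs = [placement[b][0] for b in net]
        ys = [placement[b][1] for b in net]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total

def play_episode(policy):
    """Place blocks one at a time; the reward arrives only when the layout is complete."""
    free = set(product(range(GRID), repeat=2))
    placement = {}
    for block in BLOCKS:
        cell = policy(block, placement, free)
        placement[block] = cell
        free.remove(cell)
    return placement, -hpwl(placement)  # shorter wires mean a higher reward

def random_policy(block, placement, free):
    # Stand-in for a learned policy; picks any free cell.
    return random.choice(sorted(free))

random.seed(0)
best = max((play_episode(random_policy) for _ in range(200)), key=lambda r: r[1])
print("best placement:", best[0], "reward:", best[1])
```

A reinforcement learning agent replaces `random_policy` with a network that improves its choices from the rewards of earlier episodes, which is what lets it get better with each layout it produces.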

This approach has led to significant improvements in chip design efficiency and performance.

For example, in Google's latest TPU (Tensor Processing Unit) design, AlphaChip's layouts cut wire length by 6.2% compared with those of human experts. The chip as a whole delivers a nearly five-fold increase in peak performance and a 67% improvement in energy efficiency over its predecessor, gains that reflect the entire design rather than layout alone.

AlphaChip's impact extends beyond Google. Major industry players like MediaTek have adopted AlphaChip to accelerate the development of their own advanced chips, including those used in Samsung smartphones.

Adoption by companies such as MediaTek points to a broader shift towards AI-assisted design in the semiconductor industry.

To further encourage this trend, Google DeepMind has open-sourced AlphaChip, providing researchers and developers with the tools to explore and build upon this technology.

Why it Matters

AlphaChip is a major advancement in chip design technology, not only because it automates and optimises this complex process but also because it creates a powerful feedback loop.

By designing better chips, AlphaChip enables the development of more powerful AI models. These more powerful AI models, in turn, can be used to design even better chips, creating a cycle of continuous improvement.

This feedback loop has the potential to accelerate progress in both chip design and artificial intelligence, leading to a future where chips are faster, cheaper, and more energy-efficient than ever before.

OpenAI’s Executive Battle Royale: CEO Last Man Standing

OpenAI is experiencing a dramatic shift in its leadership structure with the departure of several key executives, including Chief Technology Officer Mira Murati.

Murati, who played a crucial role in OpenAI's development and public image, cited a need for personal exploration as her reason for leaving.

Her exit is part of a larger trend that has left few of OpenAI's original co-founders at the company: apart from CEO Sam Altman, most have departed or, like president Greg Brockman, taken extended leave.

These departures come as OpenAI undergoes a controversial restructuring into a for-profit entity, and as Apple has reportedly withdrawn from a planned funding round.

Why it Matters

The recent wave of executive departures at OpenAI, including key figures like CTO Mira Murati and several co-founders, raises concerns about the company's ability to maintain its innovative edge and commitment to responsible AI development.

This leadership vacuum could disrupt ongoing projects and intensify competition in the AI field, while also raising questions about OpenAI's future direction amidst its controversial restructuring into a for-profit entity.

While OpenAI has secured significant funding and continues to attract investor interest, the long-term impact of these leadership changes remains to be seen.

📰 Article on the restructuring into a for-profit entity by Reuters

📰 Article on the executive exodus by the Observer

Microsoft Copilot: Your New AI Companion

Microsoft is rolling out a major update to its Copilot AI assistant.

Copilot now has voice and vision capabilities, allowing for natural language interaction and the ability to understand and respond to web content in Microsoft Edge.

The "Think Deeper" feature enhances Copilot's reasoning abilities, enabling it to tackle complex questions with step-by-step answers.

Why it Matters

This is more than just a software update; it's a step towards catching up to rivals OpenAI and Google.

These new features translate to tangible benefits for you:

Talk to your computer: With Copilot Voice, use natural speech to interact with your PC, ask questions, and give commands. This makes using your computer more intuitive and accessible.

Let Copilot see what you see: Copilot Vision allows your AI assistant to understand and interact with the webpages you're viewing. Need help finding furniture for your apartment? Copilot Vision can analyse the page you're on and offer suggestions in real time.

Get more in-depth answers: Think Deeper lets Copilot reason through complex questions, providing detailed and step-by-step answers. This is perfect for comparing options or tackling challenging problems.

Liquid AI Challenges Transformers with New Foundation Models

Liquid AI has introduced Liquid Foundation Models (LFMs), a new type of AI model that the company says outperforms transformer-based models of a similar size.

Liquid AI positions LFMs as smaller, faster and more memory-efficient than comparable transformers, which suits them to a wide range of applications.

They can handle long inputs and, on Liquid AI's own benchmarks, perform strongly against similarly sized models.
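One reason long inputs are expensive for standard transformers is the key-value (KV) cache, which grows linearly with the number of tokens processed. The sketch below does that arithmetic for a hypothetical transformer configuration (32 layers, 8 key-value heads, 128-dimensional heads, 16-bit values). It illustrates the baseline that memory-efficient alternatives are measured against, not how LFMs themselves store context.

```python
def kv_cache_bytes(layers, kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Memory a standard transformer uses to cache keys and values for one sequence."""
    # Factor of 2: one cached tensor for keys and one for values, in every layer.
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_value

# Hypothetical mid-sized transformer configuration with 16-bit (2-byte) values.
config = dict(layers=32, kv_heads=8, head_dim=128)

for tokens in (1_000, 8_000, 32_000):
    gib = kv_cache_bytes(seq_len=tokens, **config) / 2**30
    print(f"{tokens:>6} tokens -> {gib:.2f} GiB of KV cache")
```

Doubling the input doubles this memory, so a model whose working memory grows more slowly with input length can fit longer contexts on smaller hardware.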

Why it Matters

LFMs offer a compelling alternative to transformer-based models.

If their reported performance holds up, their memory efficiency and long context length could open up new possibilities for AI applications, especially in resource-constrained environments.

Developers can explore these models on Liquid Playground, Lambda, Perplexity Labs, and Cerebras Inference, with optimisation underway for various hardware platforms including NVIDIA, AMD, Qualcomm, Cerebras, and Apple.