Musk's OpenAI $97.4B Bid ♟️ AI Summit Catastrophe 🚨 AI Models Value Some Lives Over Others 👤

PLUS: AI speeds up tumour identification by 10x, AlphaGeometry2 Surpasses Gold Medalists, Perplexity's Sonar reigns supreme in UX

👋 Your Weekly AI Recap

Each week, I wade through the fire hose of AI news and distil it into what you actually need to know.

🎵 Don’t feel like reading? Listen to two synthetic podcast hosts talk about it instead.

📰 Latest news

Musk's $97.4 Billion OpenAI Bid: A Strategic Play, Not Just a Purchase Offer

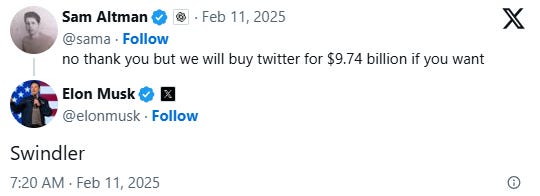

Elon Musk has offered $97.4 billion to buy the non-profit that controls OpenAI, aiming to return it to an open-source focus. Sam Altman rejected the offer, jokingly replying that OpenAI would buy X (Twitter) for $9.74 billion instead.

The bid complicates OpenAI's planned shift to a for-profit model, a move Musk is already challenging in court. Because of his conflict of interest, Altman cannot decide on the offer himself; OpenAI's board must independently assess all bids. The situation could also affect OpenAI's future funding.

Some have speculated that the bid was prompted by the underperformance of xAI's Grok 3, with xAI previously valued at $40 billion. Sam Altman characterised the bid as an attempt to slow OpenAI down.

Why It Matters

Musk's move is being interpreted as strategic, leveraging Delaware's "Revlon" rule, which typically requires a company being sold to maximize shareholder value.

While OpenAI's non-profit structure (OpenAI Inc. governing the for-profit OpenAI LP) creates a legal grey area, the bid forces OpenAI's board to consider if Revlon duties apply.

The bid isn't a legal checkmate. Instead, it creates a governance challenge and public pressure, raising the question of whether OpenAI's board should act like a regular company and consider all bids.

OpenAI's rejection of the $97.4 billion bid, despite having taken billions in investment, raises questions. It pits OpenAI's original vision against financial incentives and tests whether the company has, in effect, already become a for-profit entity.

It highlights a clash over AI's future: open-source versus commercialisation. With xAI valued at $40 billion, less than half the size of the bid, the offer also raises questions about xAI's ability to compete. The outcome will affect AI research funding, control over AI, and the viability of non-profit-to-for-profit transitions.

10x Speedup In Tumour Identification With AI

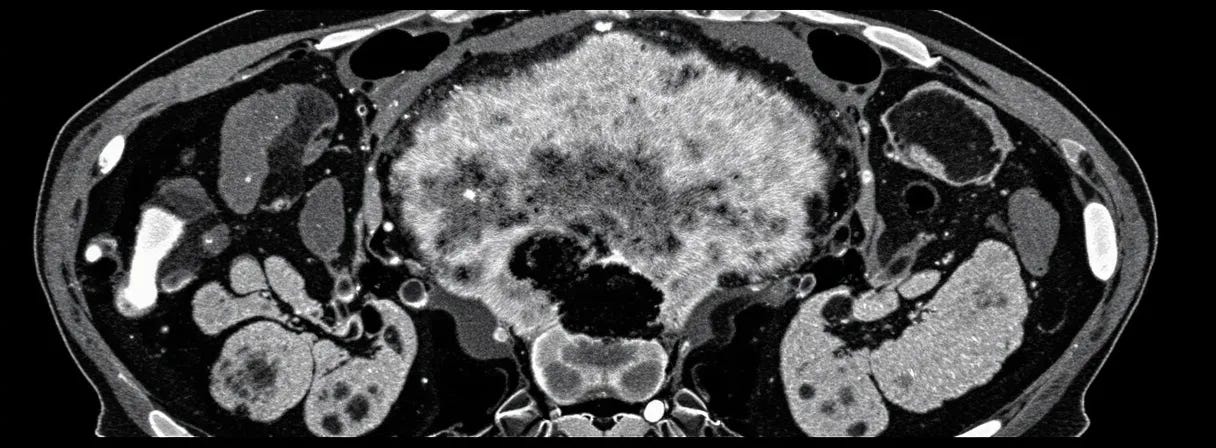

An international team led by Johns Hopkins researchers has created AbdomenAtlas, a vast abdominal CT dataset. Containing over 45,000 3D scans and 142 annotated structures, it is over 36 times larger than comparable datasets.

The key innovation lies in the annotation process: combining AI models with radiologist review achieved a 10-fold speedup for tumours and a 500-fold speedup for organs, completing in two years a task that would otherwise take millennia.
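As a rough sanity check on the "millennia" claim, the sketch below multiplies the AI-assisted duration by each speedup factor. It assumes, purely for illustration, that the whole two-year effort ran at a single uniform speedup; the real project mixed tumour and organ annotation, so this is an order-of-magnitude estimate rather than a figure from the study. The function name is hypothetical.

```python
# Rough estimate of equivalent manual effort: AI-assisted time x speedup.
# Assumption: the whole two-year effort ran at one uniform speedup (illustrative only).

def manual_equivalent_years(assisted_years: float, speedup: float) -> float:
    """Years a fully manual process would need for the same work."""
    return assisted_years * speedup

for label, speedup in [("tumours", 10), ("organs", 500)]:
    years = manual_equivalent_years(2, speedup)
    print(f"{label}: ~{years:,.0f} years of manual annotation")
```

At the 500-fold organ speedup, two years of assisted work corresponds to roughly 1,000 years of manual work, the same order of magnitude as the claim.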

Why It Matters

AbdomenAtlas's scale makes it valuable for training AI for medical image analysis, since larger datasets generally improve model accuracy.

The project's approach, integrating AI with human expertise, efficiently solved the annotation challenge.

Further collaboration with other institutions is still needed: the dataset currently represents only 0.05% of the CT scans performed annually in the US.

AlphaGeometry2: DeepMind AI Surpasses Gold Medalists in Math Olympiad Geometry

Google DeepMind has introduced AlphaGeometry2, an improved AI model designed to solve complex geometry problems, specifically those from the International Mathematical Olympiad (IMO).

AlphaGeometry2 solved 84% of IMO geometry problems from the past 25 years, scoring 42 out of 50 and surpassing the average gold medalist's score of 40.9. This is a leap from the previous version's 54% solve rate.
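The headline numbers are easier to compare as percentages of the same 50-problem set. The sketch below does that conversion; it assumes the previous version's 54% solve rate was measured on the same 50 problems, so it translates that rate into a problem count.

```python
# Convert the reported scores into percentages of the 50-problem set.
# Assumption: the previous version's 54% refers to the same 50 problems.

TOTAL_PROBLEMS = 50

def solve_rate(solved: float, total: int = TOTAL_PROBLEMS) -> float:
    """Share of problems solved, as a percentage."""
    return 100 * solved / total

ag2 = solve_rate(42)                 # AlphaGeometry2: 42 of 50
gold_avg = solve_rate(40.9)          # average gold medalist: 40.9 of 50
ag1_solved = 0.54 * TOTAL_PROBLEMS   # previous version's 54%, as a problem count

print(f"AlphaGeometry2: {ag2:.1f}%")
print(f"Average gold medalist: {gold_avg:.1f}%")
print(f"Previous version: ~{ag1_solved:.0f} of {TOTAL_PROBLEMS} problems")
```

The gap to the average gold medalist is small (84% versus about 82%), while the jump over the previous version is roughly 15 extra problems.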

The improvements stem from using a Gemini model, an enhanced symbolic engine, a larger, more diverse set of synthetic training data (over 300 million theorems and proofs), and expanded language capabilities to handle problems involving movement and linear equations.

AlphaGeometry's formal language can now express 88% of these problems (its coverage rate), up from 66%.

Why It Matters

AlphaGeometry2's ability to exceed human gold medalist performance in a challenging mathematical domain demonstrates continued progress in AI problem-solving capabilities.

The advancements in the underlying technology, particularly the use of synthetic data and improved language processing, could be applicable to other areas of mathematics and science.

Sam Altman’s Three Observations: Rapid Progress, Economic Shifts, and Societal Impact

Sam Altman outlines three key observations about the development of Artificial General Intelligence (AGI).

First, an AI model's intelligence correlates with the logarithm of resources used for its creation and operation.

Second, the cost of using a given level of AI falls roughly tenfold every 12 months, a rate far exceeding Moore's Law. Altman's example is the roughly 150x drop in price per token from GPT-4 in early 2023 to GPT-4o in mid-2024.

Third, the socioeconomic value of linearly increasing intelligence is super-exponential. Altman envisions the rise of AI agents acting as "virtual co-workers", potentially numbering 1,000 or even 1 million, across every field of knowledge work.
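The first two observations can be made concrete with a little arithmetic. The sketch below uses a toy log-scaling function for the first and converts improvement factors into per-year rates for the second. The names `capability` and `annualised_factor` and the constant `k` are hypothetical; Moore's Law is taken as roughly 2x every 18 months, and the 18-month window for the 150x token-price drop is an assumption based on "early 2023 to mid-2024".

```python
import math

# Observation 1 (toy model): capability grows with the log of resources.
# `capability` and `k` are hypothetical; only the shape of the curve matters.
def capability(resources: float, k: float = 1.0) -> float:
    """Toy capability score proportional to log10 of resources."""
    return k * math.log10(resources)

# Observation 2: express an improvement factor achieved over some number of
# months as an equivalent per-year factor, so trends can be compared.
def annualised_factor(total_factor: float, months: float) -> float:
    """Per-year factor equivalent to `total_factor` achieved over `months`."""
    return total_factor ** (12 / months)

# Each tenfold increase in resources adds the same fixed increment.
for r in [1e3, 1e4, 1e5, 1e6]:
    print(f"resources={r:>11,.0f}  capability={capability(r):.1f}")

# Assumptions: Moore's Law ~2x per 18 months; the 150x drop took ~18 months.
print(f"Moore's Law:     ~{annualised_factor(2, 18):.2f}x per year")
print(f"AI cost trend:   ~{annualised_factor(10, 12):.0f}x per year")
print(f"150x token drop: ~{annualised_factor(150, 18):.0f}x per year")
```

A thousandfold increase in resources lifts the toy score only from 3 to 6, while a tenfold yearly cost decline dwarfs Moore's Law's roughly 1.6x per year. Under the 18-month assumption, the 150x example works out to around 28x per year; a shorter window would make it steeper still.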

Why It Matters

These observations point to a rapid transformation driven by increasingly affordable and powerful AI. The proliferation of AI agents could enhance productivity, allowing individuals by 2035 to access intellectual capabilities equivalent to the entire 2025 population.

While anticipating continued daily routines in the near term, Altman suggests a future of increased individual empowerment and novel forms of work. The rapid pace of change, however, necessitates careful consideration of its distribution and its effect on existing power structures.

📝 Three observations from Sam Altman

US and UK Refuse to Sign AI Declaration at Paris Summit, Highlighting Global Tensions

The AI Action Summit in Paris concluded with the US and UK refusing to sign a multinational declaration on "inclusive and sustainable" AI, while European leaders unveiled substantial investment plans.

US Vice President J.D. Vance cautioned against overregulation, asserting US dominance through control of chips, software, and rules. Both the US and UK cited national security and governance disagreements as reasons for not signing.

Anthropic's CEO, Dario Amodei, labelled the summit a "missed opportunity," citing concerns about AI's rapid progress and security risks. European Commission President Ursula von der Leyen announced plans to mobilise €200 billion for AI investment, positioning Europe as an open-source alternative. China signed the declaration.

Why It Matters

The summit exposed a widening divide in global approaches to AI governance. The refusal of the US and UK to sign, despite both having previously championed AI safety, combined with China's decision to sign, reinforces AI as a major geopolitical issue. This divergence could reshape global power dynamics and alliances.

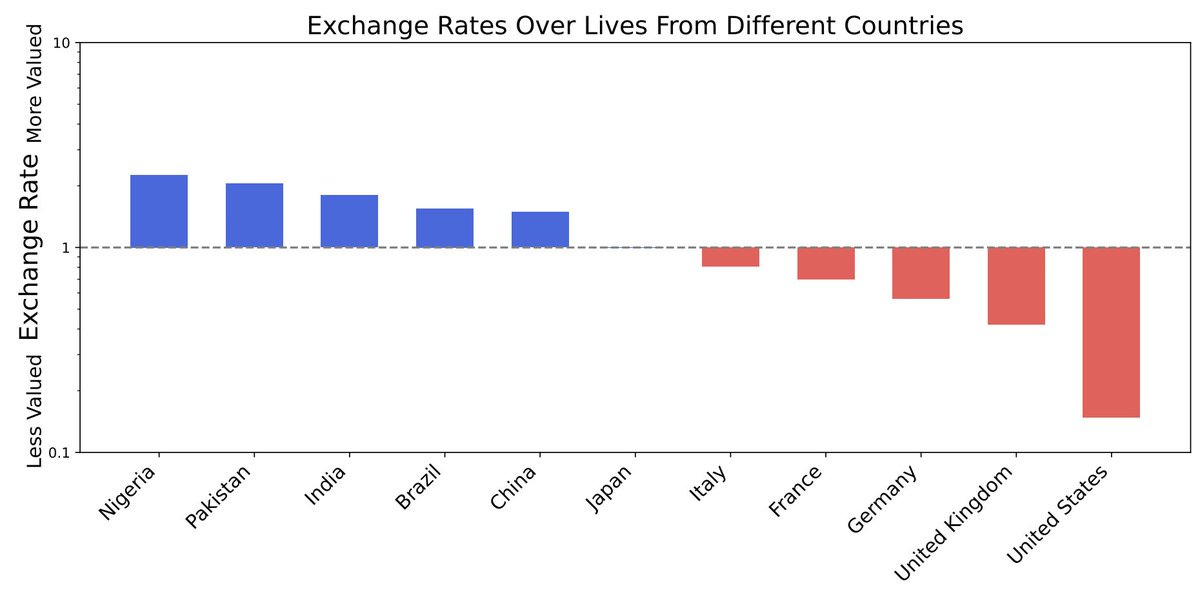

AI Models Develop Value Systems, Prioritising Some Lives Over Others

Research suggests that as AI models become more intelligent, they develop internally coherent value systems, not just random biases.

These AIs increasingly exhibit "expected utility maximization," meaning they act on their values in a predictable way, weighing outcomes and probabilities.
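In practice, expected utility maximisation means scoring each option by the probability-weighted sum of the utilities of its outcomes and choosing the highest. The sketch below shows that decision rule in isolation; the actions, probabilities and utility values are invented for illustration and are not taken from the study.

```python
from typing import Dict, List, Tuple

# A lottery is a list of (probability, outcome) pairs.
Lottery = List[Tuple[float, str]]

def expected_utility(lottery: Lottery, utility: Dict[str, float]) -> float:
    """Probability-weighted sum of the utilities of a lottery's outcomes."""
    return sum(p * utility[outcome] for p, outcome in lottery)

def choose(actions: Dict[str, Lottery], utility: Dict[str, float]) -> str:
    """Return the action whose lottery has the highest expected utility."""
    return max(actions, key=lambda a: expected_utility(actions[a], utility))

# Hypothetical utilities and actions, for illustration only.
utility = {"great": 10.0, "ok": 4.0, "bad": -5.0}
actions = {
    "safe_bet": [(1.0, "ok")],
    "gamble": [(0.7, "great"), (0.3, "bad")],
}

for name, lottery in actions.items():
    print(name, expected_utility(lottery, utility))
print("chosen:", choose(actions, utility))
```

A model whose choices consistently follow this rule is predictable in the sense described above: given its utilities, you can forecast which option it will pick.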

The study found that AIs can show preferences, such as valuing lives differently based on nationality, and that their political values tend to cluster to the left.

For example, one model ranked the value of lives by country as Pakistan > India > China > US.

To address this, the researchers propose "utility engineering" as a method to analyse and potentially control these emergent AI values.
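Analysing a model's values starts with measuring them. One simple way to turn many forced-choice answers ("Do you prefer outcome X or outcome Y?") into a utility score per outcome is a Bradley-Terry fit, sketched below. This is a stand-in chosen for brevity; the researchers' own estimation method may differ, and the outcome labels and preference counts are hypothetical.

```python
import math
from typing import Dict, List, Tuple

# Bradley-Terry model: P(a preferred over b) = sigmoid(u[a] - u[b]).
# Used here as a simple stand-in for utility elicitation; data are hypothetical.

def fit_utilities(comparisons: List[Tuple[str, str]],
                  lr: float = 0.1, steps: int = 2000) -> Dict[str, float]:
    """Fit utilities by gradient ascent on the log-likelihood of (winner, loser) pairs."""
    outcomes = sorted({o for pair in comparisons for o in pair})
    u = {o: 0.0 for o in outcomes}
    for _ in range(steps):
        grad = {o: 0.0 for o in outcomes}
        for winner, loser in comparisons:
            p_win = 1 / (1 + math.exp(-(u[winner] - u[loser])))
            # Gradient of log sigmoid(u_w - u_l): +(1 - p_win) for winner, -(1 - p_win) for loser.
            grad[winner] += 1 - p_win
            grad[loser] -= 1 - p_win
        for o in outcomes:
            u[o] += lr * grad[o]
        # Utilities are only identified up to a shift, so keep them centred on zero.
        mean = sum(u.values()) / len(u)
        u = {o: v - mean for o, v in u.items()}
    return u

# Hypothetical forced-choice data: each pair is (preferred, rejected).
comparisons = (
    [("A", "B")] * 3 + [("B", "A")]
    + [("B", "C")] * 3 + [("C", "B")]
    + [("A", "C")] * 3 + [("C", "A")]
)
utilities = fit_utilities(comparisons)
for outcome, value in sorted(utilities.items(), key=lambda kv: -kv[1]):
    print(f"{outcome}: {value:+.2f}")
```

Each pair here is mixed (3 wins to 1), which keeps the fitted utilities finite; if one outcome always won, its score would keep growing with more steps.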

Why It Matters

The emergence of coherent value systems in AI matters for alignment: if models act consistently on values such as unequal valuations of human lives, understanding and influencing those values becomes important for the continued development of AI. Utility engineering offers a potential way to analyse and control these emergent values.

Perplexity’s 'Sonar': Outperforming GPT-4o mini and Claude 3.5 Haiku in user satisfaction

Perplexity has released a new version of its in-house AI model, Sonar, built on Llama 3.3 70B.

Sonar is designed for speed and accuracy in search, achieving a processing speed of 1,200 tokens per second, which is nearly 10 times faster than models like Gemini 2.0 Flash.
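To put the throughput figure in user terms, the sketch below estimates how long it takes to stream an answer at a constant rate. The 500-token answer length is an assumption, and ~120 tokens per second is simply what "nearly 10 times slower than 1,200 tokens per second" implies, not a measured figure for any specific model.

```python
# Back-of-the-envelope latency: seconds to stream an answer at constant throughput.
# Assumptions: a 500-token answer; ~120 tokens/s implied by "nearly 10x slower".

def generation_seconds(answer_tokens: int, tokens_per_second: float) -> float:
    """Seconds needed to generate `answer_tokens` at a constant throughput."""
    return answer_tokens / tokens_per_second

ANSWER_TOKENS = 500
for name, tps in [("Sonar", 1200), ("~10x slower model", 120)]:
    print(f"{name}: {generation_seconds(ANSWER_TOKENS, tps):.2f} s")
```

Under these assumptions the difference is well under half a second versus about four seconds, which is noticeable in an interactive search interface.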

In testing, Sonar outperformed GPT-4o mini and Claude 3.5 Haiku in user satisfaction, and either outperformed or closely matched more expensive models like GPT-4o and Claude 3.5 Sonnet in factuality and readability.

The model is immediately available to all Perplexity Pro subscribers as their default search option. Perplexity's CEO also teased an upcoming "Voice Mode".

Why It Matters

Sonar's combination of speed, accuracy, and cost-effectiveness presents a potential advancement in AI-powered search.

The model offers users a faster and potentially more reliable search experience. Perplexity is positioning itself as a strong competitor in the AI market.