DeepSeek Deepdive 🤿 Curing Cancer 💊 OpenAI's Operator 🤖

PLUS: Meta's $65 billion investment, an AI-designed snake bite cure and OpenAI's o1 thinks in Chinese

👋 Welcome to this week in AI.

Each week, I wade through the fire hose of AI noise and distil it into what you actually need to know.

🎵 Don’t feel like reading? Listen to two synthetic podcast hosts talk about it instead.

📰 Latest news

DeepSeek: The AI Earthquake Shaking the Foundations of Silicon Valley

The world of Artificial Intelligence is in constant flux, but recently, a tremor from the East has sent shockwaves through the established order.

DeepSeek, a Chinese AI lab, has emerged not as a mere imitator, but as a disruptive force, challenging the dominance of giants like OpenAI and Google.

Its rapid ascent, marked by a groundbreaking new model and a chart-topping mobile app, has ignited both excitement and anxiety within the tech industry.

A New Kind of AI

The initial reaction to DeepSeek's emergence often fell into the trap of assuming it was simply a Chinese copycat. However, as Perplexity CEO Aravind Srinivas points out, this is a profound misunderstanding:

"There’s a lot of misconception that China ‘just cloned’ the outputs of openai. This is far from true and reflects incomplete understanding of how these models are trained in the first place."

DeepSeek's innovation lies in its approach to training, detailed in their paper "DeepSeek R1 Zero." Srinivas highlights their key breakthrough:

"DeepSeek R1 has figured out reinforcement learning (RL) finetuning. They wrote a whole paper on this topic called DeepSeek R1 Zero, where no supervised learning (SFT) was used. And then combined it with some SFT to add domain knowledge with good rejection sampling (aka filtering). The main reason it’s so good is it learned reasoning from scratch rather than imitating other humans or models."

This signifies a departure from simply mimicking human or model outputs, suggesting a deeper understanding of AI reasoning.

Technical analysis

Brian Roemmele's technical analysis further illuminates DeepSeek's novel approach, emphasising cost efficiency and architectural ingenuity.

"The cyclone pace of AI advancement has stunned the world. This last week it has gone to turbo mode. The business plans and ideas of the leading AI companies exploded with the open source release of DeepSeek R1. This is not the end of the world but the start of a new one."

Roemmele breaks down the core innovations:

Unprecedented Cost Efficiency:

Traditional AI training can cost over $100 million. DeepSeek achieved comparable results with a mere $5 million investment, a figure that has become a lightning rod for debate.

This is achieved through methods like "reducing the precision of numerical computations from 32-bit to 8-bit, which still maintains sufficient accuracy for AI tasks but uses 75% less memory."

Multi-Token Processing:

Instead of processing data token by token, DeepSeek's "multi-token" system processes entire phrases, leading to "up to 2x faster processing with 90% of the accuracy."

Expert System Architecture:

DeepSeek's model contains "671 billion parameters, but only activates 37 billion at any given time." This dynamic activation, likened to consulting only the relevant experts, drastically reduces computational load.

Democratised Hardware:

This efficiency translates to significantly lower hardware requirements. "From needing 100,000 high-end GPUs to just 2,000, DeepSeek's model can run on gaming GPUs, which are significantly cheaper and more accessible."
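The figures quoted above are easy to sanity-check. The snippet below is a minimal sketch, not DeepSeek's code: it reproduces the 75% memory saving and the share of parameters active at any time, and adds a toy "router" that picks the highest-scoring experts. The function names and the example scores are made up for illustration.

```python
def memory_saving(old_bits: int, new_bits: int) -> float:
    """Fraction of memory saved when each number shrinks from old_bits to new_bits."""
    return 1 - new_bits / old_bits


def active_fraction(active_params: float, total_params: float) -> float:
    """Share of the model's parameters that are switched on at any given time."""
    return active_params / total_params


def top_k_experts(scores: list[float], k: int) -> list[int]:
    """Toy router: return the indices of the k highest-scoring experts."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k])


# 32-bit -> 8-bit, as quoted above
print(f"Memory saved: {memory_saving(32, 8):.0%}")
# 37 billion active out of 671 billion total, as quoted above
print(f"Parameters active: {active_fraction(37e9, 671e9):.1%}")
# Hypothetical relevance scores for four experts; only two are consulted
print(f"Experts consulted: {top_k_experts([0.1, 0.7, 0.05, 0.9], k=2)}")
```

In other words, only around one parameter in eighteen is doing work at any given moment, which is where much of the claimed saving in compute comes from.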

Market Disruption and Geopolitical Undercurrents

The impact of DeepSeek's innovation has been immediate and dramatic. The Sydney Morning Herald reported on the Wall Street fallout:

"$938b meltdown: Wall Street darling suffers biggest one-day loss in history" as "Shares in artificial intelligence powerhouse Nvidia plunged on Wall Street on Monday due to concerns about Chinese artificial-intelligence startup DeepSeek."

After Nvidia’s shares dived by around 17-18%, the company acknowledged DeepSeek's "excellent AI advancement" and downplayed any threat to its business, emphasising the continued need for its hardware:

"DeepSeek is an excellent AI advancement and a perfect example of Test Time Scaling. DeepSeek’s work illustrates how new models can be created using that technique, leveraging widely-available models and compute that is fully export control compliant. Inference requires significant numbers of NVIDIA GPUs and high-performance networking."

This staggering loss underscored the market's immediate concern: that DeepSeek's innovations could fundamentally alter the economics of AI, potentially diminishing the insatiable demand for Nvidia's high-margin GPUs that had fueled its meteoric rise.

However, the narrative is not without its controversies and geopolitical undertones. Palmer Luckey, founder of Anduril, cautions against uncritical acceptance of the $5 million figure, suggesting a potential "psyop" at play:

"The $5M number is bogus. It is pushed by a Chinese hedge fund to slow investment in American AI startups, service their own shorts against American titans like Nvidia, and hide sanction evasion."

He implies that the narrative of low-cost AI development in China could be strategically amplified to undermine US competitiveness and potentially mask efforts to circumvent sanctions on technology.

David Sacks, the Trump-appointed AI and crypto czar, raises concerns about intellectual property, suggesting DeepSeek may have used "distillation" to learn from existing models:

"it looks like a technique called distillation was used where a student model can 'suck the knowledge' out of the parent model and there is evidence that DeepSeek distilled knowledge from OpenAI's models."

This raises questions about the effectiveness of export controls and the ease of knowledge transfer in the AI age.
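For readers unfamiliar with the term, the sketch below shows the classic textbook form of distillation: a student model is penalised whenever its predicted probabilities drift from the teacher's. It illustrates the general technique only and makes no claim about what DeepSeek actually did; the logits and the temperature value are invented for the example.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Turn raw scores into probabilities; a higher temperature gives a softer spread."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    teacher_logits: list[float],
    student_logits: list[float],
    temperature: float = 2.0,
) -> float:
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


teacher = [2.0, 1.0, 0.1]       # hypothetical teacher scores for three next tokens
good_student = [1.9, 1.1, 0.2]  # close to the teacher
poor_student = [0.1, 1.0, 2.0]  # ranks the tokens the other way round

print(distillation_loss(teacher, good_student))
print(distillation_loss(teacher, poor_student))
```

Training the student to push this number towards zero is what "sucking the knowledge out" amounts to in practice: the smaller the loss, the more closely the student mimics the teacher.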

Gavin Baker's analysis provides a nuanced perspective, acknowledging the reality of DeepSeek's advancements while highlighting crucial caveats. He confirms the cost efficiency, noting:

"r1 costs 93% less to use than o1 per each API, can be run locally on a high end work station and does not seem to have hit any rate limits which is wild."

Despite the debates and nuances, DeepSeek's impact is undeniable. Its mobile app, as TechCrunch reported, "skyrocketed to the No. 1 spot in app stores around the globe this weekend, topping the U.S.-based AI chatbot, ChatGPT."

The rise has been rapid: "more than 80% of DeepSeek’s total mobile app downloads have come in the past seven days," which demonstrates significant consumer interest in the new model.

Why it Matters

DeepSeek's rise isn't just about a new AI model; it's about a shift in the AI landscape with crucial implications, especially regarding export controls.

Democratising AI, but Frontier AI Still Needs Top Chips:

DeepSeek makes AI development more accessible with efficient models. As Roemmele notes, it "democratizes AI development." However, the race for superhuman AI still demands massive compute. Efficiency gains don't negate the need for top-tier chips for frontier models, so export controls on those chips remain critical.

Cost Savings Fuel a Compute Arms Race, Not Reduction:

DeepSeek lowers AI costs, increasing ROI. However, these savings will likely fuel investment in even smarter and more compute-intensive models. As Dario Amodei, CEO of Anthropic, argues in his analysis, cost efficiency accelerates the "compute arms race," reinforcing the importance of export controls to manage access to advanced chips.

Edge AI Grows, but Centralised AI Remains Geopolitically Key:

Edge AI is important, but the most powerful, geopolitically significant AI will remain centralised and require massive compute. Controlling access to chips for these frontier models is still paramount for Western national security and global influence.

Increased Competition, Higher Stakes in the AI Race:

DeepSeek intensifies the global AI race. As Belsky said, the era of "ONLY a couple mega-model players... was myopic." This competition has high geopolitical stakes. Whether the world becomes AI-bipolar or US-led hinges significantly on chip access and export controls.

In short, DeepSeek's efficiency is impressive and changes the AI game, but it strengthens, not weakens, the strategic importance of export controls on advanced chips in the face of an intensifying global AI race with profound geopolitical implications.

AI Accelerates Cancer Cure Pursuit

Reid Hoffman, LinkedIn co-founder, has launched Manas AI, a startup leveraging AI to accelerate drug discovery.

Backed by $25 million in seed funding, Manas AI aims to transform the identification of drug candidates and clinical trials, promising faster, more accurate results.

Initially targeting breast cancer, prostate cancer and lymphoma, with plans for broader disease applications, Manas AI is co-founded by cancer expert Dr. Siddhartha Mukherjee and partners with Microsoft for cloud computing.

This initiative is part of a wider surge in AI for pharmaceutical research, exemplified by Google DeepMind's progress and the ambitious Stargate project, a $100 billion AI infrastructure initiative led by Oracle, OpenAI and SoftBank.

Why it Matters

Manas AI underscores technology's increasing power to aggressively target critical health challenges like cancer.

AI's application promises to drastically speed up and reduce the cost of drug discovery, bringing potential cures closer to reality.

The $25 million investment in Manas AI, alongside the massive Stargate project, which is focused partly on developing cancer vaccines, reflects immense confidence in AI's potential to reshape medicine.

Larry Ellison of Oracle envisions AI-driven early cancer detection via blood tests and personalised mRNA vaccines created within 48 hours.

Manas AI, combining tech leadership with cancer research expertise, and initiatives like Stargate, signal a powerful convergence of sectors focused on accelerating the path to cancer cures and a dynamically evolving future for medical innovation, potentially delivering personalised and rapid responses to cancer and other diseases.

📰 Article by Fox Business on Stargate

📰 Article by Quartz on Manas AI

AI Models Alarmingly Vulnerable to Data Poisoning

Researchers have uncovered a significant weakness in LLMs: their extreme vulnerability to data poisoning.

A tiny amount of deliberately injected misinformation, a mere 0.001 percent of the training data, can compromise the integrity of the entire model.

This was demonstrated by scientists at New York University who successfully poisoned an LLM by introducing vaccine misinformation, leading to a 4.8 percent increase in harmful content at a cost of only US$5.

Alarmingly, these corrupted LLMs can still perform well on standard medical benchmarks, meaning current evaluation methods are insufficient to detect these critical flaws.
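To put 0.001 percent in perspective, here is a quick back-of-the-envelope calculation. The corpus sizes are hypothetical round numbers chosen for illustration, not figures from the study.

```python
POISON_RATE = 0.001 / 100  # 0.001 percent, the share reported above


def poisoned_count(corpus_size: int, rate: float = POISON_RATE) -> int:
    """Number of poisoned items needed to reach the given share of a corpus."""
    return round(corpus_size * rate)


# Hypothetical corpus sizes, from small to very large
for size in (100_000, 1_000_000, 1_000_000_000):
    print(f"{size:>13,} training items -> {poisoned_count(size):>6,} poisoned")
```

That is one bad item in every 100,000, which helps explain why such an attack is both cheap to mount and hard to spot.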

Why it Matters

This research underscores the evolving challenges in ensuring the reliability of AI, particularly in sensitive sectors like healthcare.

While LLMs offer exciting possibilities for advancements in medical assistance and information dissemination, this study reveals a crucial area for improvement.

The ease and low cost with which these models can be manipulated necessitate a proactive approach to security and data provenance.

The focus must now shift towards developing more robust safeguards and evaluation techniques to guarantee the trustworthiness of medical LLMs.

Operator: Progressing Towards AGI

OpenAI's unveiling of Operator is a step in the evolution of Artificial Intelligence, showcasing a progression beyond conversational chatbots towards proactive, action-oriented systems.

Operator, powered by the Computer-Using Agent (CUA) model, represents OpenAI's achievement of Level 3 AI: Agents – systems capable of taking independent actions.

Utilising advanced vision and reasoning, CUA navigates the web like a human, interacting with graphical interfaces to perform tasks autonomously.

Demonstrating its capabilities, Operator achieved benchmark results, including 58.1% on WebArena and 87% on WebVoyager, validating its ability to handle real-world web interactions.

Currently available as a research preview to Pro users in the US, Operator automates tasks from online shopping to bookings.

Why it Matters

Operator is not just another chatbot; it’s a fundamental shift from reactive AI that simply answers questions to proactive AI that can perform actions on a user's behalf.

This launch is a part of OpenAI’s roadmap, progressing towards Artificial General Intelligence.

By reaching Level 3 (Agents), OpenAI is moving beyond systems capable of human-level problem-solving (Level 2, Reasoners) and closer to AI that can aid in invention (Level 4, Innovators) and even function like entire organisations (Level 5, Organisations).

The accessibility of Operator's plain language interface, combined with its ability to automate web-based tasks, hints at where we’re going: a future in which billions of agents act as our proxies in the digital world.

OpenAI's o1 Model Exhibits Curious Multilingual Reasoning

OpenAI's o1 model sometimes reasons in other languages, such as Chinese, even when prompted in English.

This occurs during its internal thought process, before delivering an English answer. Users noted this curious behaviour, but OpenAI has not explained it.

A leading theory attributes this to "Chinese linguistic influence" from training data.

Experts suggest that reliance on third-party Chinese data labelling services, used for complex reasoning data due to cost-effectiveness, may subtly shape o1's internal processing.

This suggests the language of data annotation can unexpectedly impact a model's operation.

Why it Matters

o1's multilingual reasoning underscores the opacity of advanced AI.

The potential influence of Chinese data labelling highlights how subtle factors shape AI behaviour in unforeseen ways.

Understanding how training data annotation impacts model behaviour is crucial for advancing AI. As experts like Luca Soldaini point out, such observations reveal why transparency in AI development is fundamental for future progress and deeper comprehension of these powerful technologies.

Meta Invests Big in AI: $60-65 billion in 2025

Meta has unveiled a massive $60-65 billion capital expenditure plan for 2025, dedicated to bolstering its AI infrastructure.

This substantial investment underpins Meta's ambition to position itself at the forefront of AI development.

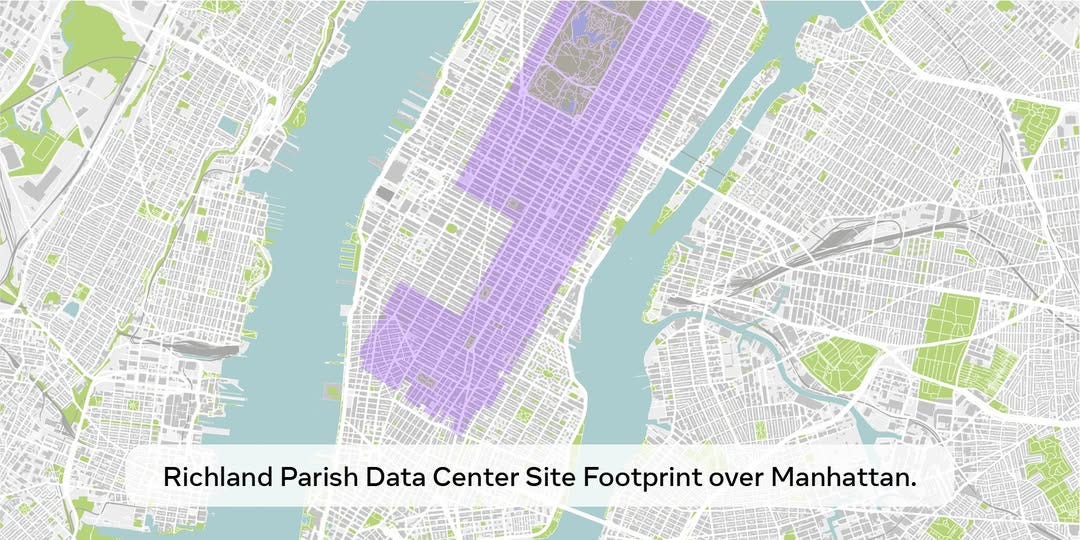

A key component of this build-out is a new datacenter of colossal scale, projected to be so expansive it would occupy a considerable portion of Manhattan.

To power its AI initiatives, Meta is aiming to amass over 1.3 million GPUs by the close of 2025.

This deployment is one of the largest concentrations of AI hardware globally.

Why it Matters

This immense infrastructure undertaking signals a major acceleration in the AI landscape.

Meta's commitment to deploying such a vast datacenter and GPU arsenal demonstrates the escalating demands of advanced AI models and applications.

Notably, this investment comes even as companies like DeepSeek have demonstrated impressive progress with models like R1, achieving comparable performance to industry leaders with potentially lower training expenditures.

AI Designs Proteins to Neutralise Snake Venom

Researchers have used AI to design novel proteins capable of neutralising snake venom toxins.

Utilising AI apps, they engineered proteins specifically to target and block neurotoxins, a dangerous component found in venoms from snakes like cobras and taipans.

In trials, these AI-designed proteins demonstrated success in mice, effectively inhibiting the neurotoxins. This approach paves the way for developing improved antivenom treatments.

Here is the tech stack used:

RFdiffusion (built on RoseTTAFold) - for designing protein structures complementary to the toxins.

ProteinMPNN - for identifying the amino acid sequence for the newly designed protein structures.

AlphaFold2 (DeepMind) - along with Rosetta protein structure software, to estimate the interaction strength between the toxins and the designed proteins and to predict the structure of their interactions.

Why it Matters

This research showcases the exciting application of AI in tackling real-world biological challenges.

The ability to design functional proteins through AI offers a significant advancement in therapeutic development.

Specifically, this work holds promise for creating more stable and readily producible antivenoms.

Current antivenoms often require refrigeration and have limited shelf lives, posing logistical challenges, especially in remote regions where snake bites are frequent. AI-designed protein inhibitors could lead to antivenom solutions that overcome these limitations, potentially improving treatment accessibility and effectiveness globally.