🤯 AI Just Learned to *THINK* - Are You Smarter?

Microsoft's Copilot just got an upgrade, a new game generation model just dropped and Klarna is making waves again.

Welcome to this week in AI.

We’ve had a HUGE week: OpenAI released its new o1 reasoning model, Microsoft’s Copilot got an upgrade, AI video creation is accelerating and set to disrupt jobs, plus lots more.

Don’t feel like reading? I’ve turned this newsletter into a podcast (8:47).

Highly recommended: it’s a conversation between two synthetic podcast hosts.

This is the 29th edition of This Week in AI, and I’d love to hear what you think. Please spend a minute answering these three quick questions.

OpenAI Releases o1: The Next Step Towards AGI

OpenAI has finally released its much-anticipated o1 series of AI models, formerly known as 'Strawberry'.

The o1 models, including o1-preview and o1-mini, leverage reinforcement learning and introduce the concept of "reasoning tokens" to mimic human-like thought processes.

This new approach enables the models to excel in complex reasoning tasks, outperforming their predecessor, GPT-4o, in numerous areas.

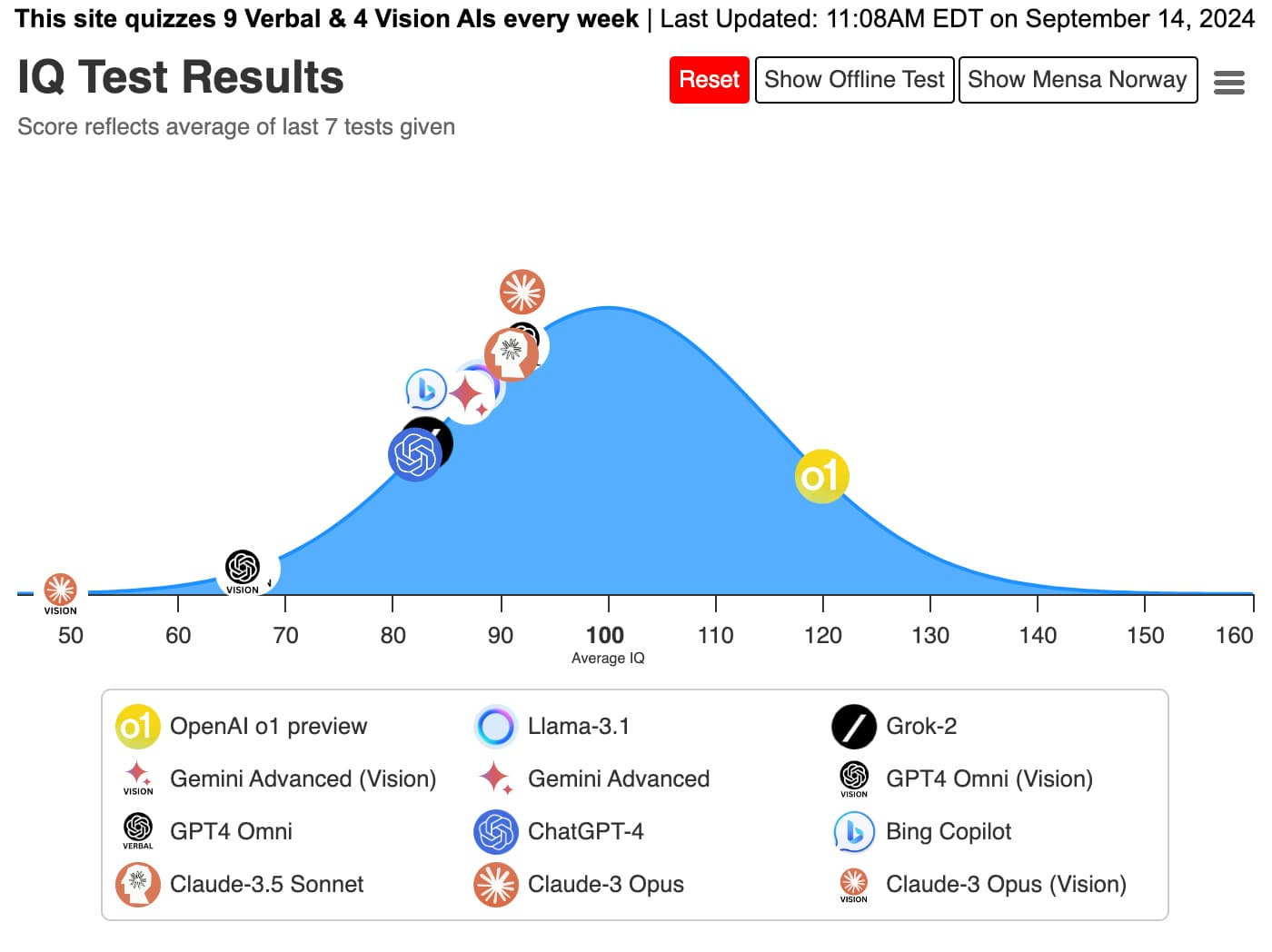

Norway Mensa IQ Test: o1 correctly answered 25 out of 35 questions, scoring an estimated IQ of around 120, well above the human average of 100.

AIME Math Exam: In this competition for America’s brightest high school math students, o1 scored a remarkable 83.3%, compared with GPT-4o’s 13.4%.

Codeforces: On this competitive programming platform, o1 achieved an Elo rating of 1673, outperforming 89% of human competitors.

International Olympiad in Informatics (IOI): In this international programming competition for high school students, o1 ranked in the 49th percentile.
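To put two of these numbers in context, here’s a quick sketch. It assumes the IQ score uses the common scale with a mean of 100 and a standard deviation of 15, and it uses the classic Elo expected-score formula. Codeforces runs its own Elo-style rating system, so treat the second function as an approximation; the 1500-rated opponent is a hypothetical example.

```python
import math


def iq_percentile(iq: float, mean: float = 100.0, sd: float = 15.0) -> float:
    """Percentage of a normally distributed population scoring below `iq`."""
    z = (iq - mean) / sd
    # Standard normal CDF written with the error function.
    return 50.0 * (1.0 + math.erf(z / math.sqrt(2.0)))


def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Classic Elo: expected score (0 to 1) for player A against player B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


if __name__ == "__main__":
    print(f"Mensa raw score: {25 / 35:.1%}")  # 25 of 35 questions
    print(f"IQ 120 percentile: {iq_percentile(120):.1f}")
    # Hypothetical 1500-rated opponent, for illustration only.
    print(f"Expected score vs 1500: {elo_expected_score(1673, 1500):.2f}")
```

On those assumptions, an IQ of 120 sits a little above the 90th percentile, and a 1673-rated player would be expected to score roughly 0.73 per game against a 1500-rated one.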

Why it Matters

The release of o1 addresses a key issue with current LLMs: they don’t reason before answering.

The new model utilises Chain of Thought (CoT) reasoning, but rather than being a prompting technique, it is baked into the model itself.

This puts OpenAI at level two (“Reasoners”) of its roadmap to Artificial General Intelligence (AGI); the next level is agents.
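For contrast, here’s roughly what CoT looks like as a prompting technique, the approach o1 now internalises. It’s a minimal sketch of zero-shot CoT: the “Let’s think step by step” trigger comes from the research literature, and the chat-message format (a list of role/content dicts) is an assumption modelled on common chat APIs. Nothing is sent to a model here.

```python
def plain_prompt(question: str) -> list[dict[str, str]]:
    """Ask the question directly: the model answers straight away."""
    return [{"role": "user", "content": question}]


def chain_of_thought_prompt(question: str) -> list[dict[str, str]]:
    """Zero-shot CoT: nudge the model to write out its reasoning before answering."""
    instruction = ("Let's think step by step. Show your reasoning, "
                   "then give the final answer on its own line.")
    return [{"role": "user", "content": f"{question}\n\n{instruction}"}]


if __name__ == "__main__":
    q = ("A bat and a ball cost $1.10 in total. The bat costs $1.00 "
         "more than the ball. How much is the ball?")
    print(plain_prompt(q)[0]["content"])
    print(chain_of_thought_prompt(q)[0]["content"])
```

With o1 you’d send the plain version: OpenAI’s own guidance for its reasoning models advises against adding “think step by step” instructions, since the model already reasons internally.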

Some examples of o1’s performance are wild:

PhD Thesis Code in One Hour: o1 generated code for a PhD thesis in about an hour, a task that originally took the researcher a year.

High-Level Architecture: Developers are using o1 for high-level application architecture design, then switching to faster models for code generation; in some cases creating apps in less than 10 minutes.
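That plan-then-build workflow can be sketched as a two-stage pipeline. The `planner` and `coder` callables are hypothetical stand-ins for a slow reasoning model (such as o1) and a faster code model, so wire them to whichever APIs you use. Parsing one component per line is also an assumption about the planner’s output format.

```python
from typing import Callable

# Hypothetical model interface: takes a prompt, returns the model's text reply.
ModelFn = Callable[[str], str]


def plan_then_build(spec: str, planner: ModelFn, coder: ModelFn) -> dict[str, object]:
    # Stage 1: a single (slow, expensive) reasoning-model call for the architecture.
    plan = planner(
        f"Design a high-level architecture for: {spec}\n"
        "List one component per line."
    )
    # Drop blank lines and leading bullet markers such as "- ".
    components = [line.strip("- ").strip() for line in plan.splitlines() if line.strip()]

    # Stage 2: many (fast, cheap) calls, one per component, each given the full plan.
    code = {
        name: coder(f"Implement this component: {name}\nArchitecture:\n{plan}")
        for name in components
    }
    return {"plan": plan, "code": code}
```

The design choice is simply to spend the expensive reasoning once, where it matters most, and hand the repetitive work to a cheaper, quicker model.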

These models are very impressive, but they do have limitations, primarily their speed and running cost. The latency between prompt and response makes them feel less responsive.

For agents, this latency is less of an issue because agentic workflows tend to be asynchronous or less conversational in nature.

You can access o1-preview and o1-mini in ChatGPT right now with a Plus subscription. OpenAI also describes how best to use the models here.
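Here’s a minimal asyncio sketch of why latency matters less for agents: when independent tasks run concurrently, total wall time is close to one slow call rather than the sum of them all. `slow_reasoning_call` is a hypothetical stand-in that just sleeps; no real model is called.

```python
import asyncio
import time


async def slow_reasoning_call(task: str, latency_s: float) -> str:
    # Hypothetical stand-in for a slow reasoning-model request.
    await asyncio.sleep(latency_s)
    return f"done: {task}"


async def run_agent_tasks(tasks: list[str], latency_s: float = 0.2) -> list[str]:
    # Launch every task at once; results come back in the same order as `tasks`.
    return await asyncio.gather(*(slow_reasoning_call(t, latency_s) for t in tasks))


if __name__ == "__main__":
    start = time.perf_counter()
    results = asyncio.run(run_agent_tasks(["triage inbox", "draft report", "plan sprint"]))
    print(results, f"{time.perf_counter() - start:.2f}s")
```

With three tasks at 0.2 s each, this finishes in roughly 0.2 s rather than 0.6 s, because nobody is sitting and waiting on each reply in turn.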

Microsoft 365 Copilot Wave 2: Key Enhancements and Features

Microsoft has released the second major update to its AI assistant, Copilot.

This release brings several key improvements designed to boost productivity and streamline workflows across the Microsoft 365 suite.

Copilot responses are now over twice as fast on average and user satisfaction scores have increased nearly threefold.

New Features

Copilot Pages: A new collaborative workspace that integrates AI capabilities, allowing for dynamic and persistent content creation with real-time teamwork.

Expanded App Integration: Copilot now offers deeper integration with Excel, PowerPoint, Teams, Outlook, Word, and OneDrive.

Copilot Agents: These AI assistants automate and execute business processes, working alongside or on behalf of users. Agent builder simplifies the creation of custom agents.

Why it Matters

For daily Microsoft 365 users, these updates could improve efficiency and focus.

The new features will streamline workflows by eliminating the need to switch between apps for tasks like data analysis, email management, or presentation creation.

While the full impact remains to be seen, these enhancements further embed AI into the Microsoft 365 ecosystem and promise a more seamless and productive experience.

📝 Information and videos on Wave 2

Tencent's GameGen-O: AI-Powered Open-World Game Generation

Watch the generated gameplay (highly recommended)

Tencent has introduced GameGen-O, an AI model capable of generating open-world video games.

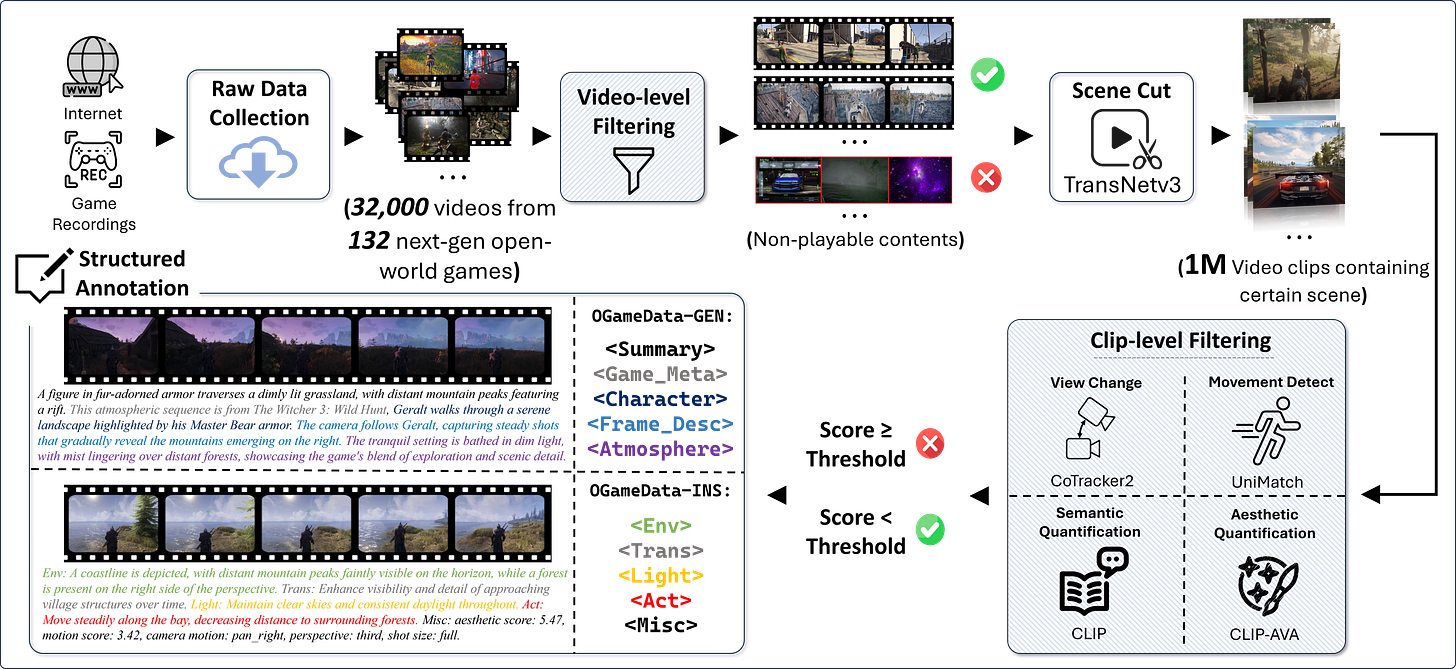

This innovative model uses a two-stage training process, leveraging a massive dataset:

Collected from 132 open-world games.

32,000 videos of gameplay.

Clipped into 1 million clips of certain scenes.

It can simulate a wide range of game features, including characters, dynamic environments, and complex actions.

The generated games are interactive and open up a new world of possibilities for game development.

Why it Matters

GameGen-O represents another step forward in the ongoing development of generative gaming, building on the recent release of a generated version of Doom.

By utilising generative models as an alternative to traditional rendering techniques, it could streamline the game creation process, allowing for faster and more efficient content generation.

The model's ability to generate high-quality, open-domain content with interactive elements could lead to more immersive and personalised gaming experiences.

This advancement signals a shift in which AI will play an increasingly significant role in video game development, a $200 billion industry.

Klarna's AI Pivot: Redefining Enterprise Software and Challenging SaaS Dominance

Klarna's recent decision to replace established SaaS platforms like Salesforce and Workday with an in-house AI solution is making waves in the enterprise software industry.

By leveraging AI, Klarna aims to achieve greater efficiency and cost savings compared with traditional SaaS offerings.

This move challenges the long-held notion that deeply integrated SaaS platforms are irreplaceable and that switching costs are too high to make a move feasible.

Interestingly, this isn't Klarna's first foray into AI disruption. The company previously replaced around 700 customer-facing staff with an AI assistant, resulting in reported cost savings and an improved customer experience.

Why it matters

Klarna's move signals a potential shift in how large enterprises approach their software needs.

With AI enabling the creation of custom solutions that can rival or even outperform established SaaS platforms, CIOs and IT leaders may reconsider their reliance on external vendors.

The prospect of substantial cost savings, particularly with AI handling the heavy lifting, coupled with the ability to tailor solutions to specific needs, could make in-house AI development an attractive alternative.

However, it's critical to remember that while licensing fees might decrease, the real cost lies in the ongoing maintenance and upkeep of running your own infrastructure.
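A back-of-the-envelope way to frame that trade-off is a break-even calculation. This is a deliberately simple, undiscounted sketch with flat yearly costs; the figures in the example are hypothetical, not Klarna’s.

```python
import math


def break_even_years(build_cost: float, annual_licence_saved: float,
                     annual_maintenance: float) -> float:
    """Years until an in-house build pays for itself (no discounting, flat costs).

    Returns math.inf when yearly upkeep eats all of the licence savings.
    """
    net_saving_per_year = annual_licence_saved - annual_maintenance
    if net_saving_per_year <= 0:
        return math.inf
    return build_cost / net_saving_per_year


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    print(break_even_years(build_cost=1_000_000,
                           annual_licence_saved=500_000,
                           annual_maintenance=300_000))  # 5.0
```

If maintenance creeps up to match the licence fees you’ve dropped, the build never pays for itself, which is exactly the hidden cost to watch.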

The Future of Video Creation and Its Implications for the Job Market

Video generation is experiencing a surge in activity, with Runway and Luma Labs both releasing APIs that make their advanced video generation models accessible to developers.

Runway has also launched its Gen-3 Alpha Video-to-Video feature, enabling users to modify existing video content using text prompts.

This tool offers capabilities like text-guided transformation, style transfer, weather and lighting adjustments, and object manipulation.
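Video generation takes a while, so APIs like these tend to work asynchronously: you submit a job, then check back until it’s finished. Here’s a generic polling sketch. `get_status` and the `state`, `url` and `error` fields are hypothetical placeholders, not Runway’s or Luma’s actual SDK or response schema, so check their docs for the real calls.

```python
import time
from typing import Callable


def wait_for_video(get_status: Callable[[str], dict], job_id: str,
                   poll_every_s: float = 5.0, timeout_s: float = 600.0) -> dict:
    """Poll a (hypothetical) job-status function until the video is ready."""
    deadline = time.monotonic() + timeout_s
    while True:
        # Assumed shape: {"state": "pending"} or {"state": "succeeded", "url": "..."}
        status = get_status(job_id)
        if status["state"] == "succeeded":
            return status
        if status["state"] == "failed":
            raise RuntimeError(f"Video job {job_id} failed: {status.get('error')}")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Video job {job_id} not ready after {timeout_s}s")
        time.sleep(poll_every_s)  # wait before asking again
```

A fixed interval keeps the sketch simple; for long jobs you might back off gradually instead of polling at a steady rate.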

Why it Matters

Developers can now seamlessly integrate state-of-the-art video generation capabilities into their applications, opening doors for new tools and content creation.

This has the potential to disrupt various industries, from marketing and advertising to education and entertainment.

These new models and workflows have the potential to impact the job market, particularly in the film and TV industry.

A study indicates that 75% of film production companies that have adopted AI have already reduced, consolidated, or eliminated jobs.

Moreover, it is predicted that by 2026, over 100,000 U.S. entertainment jobs will be disrupted by generative AI.

While the future of video generation is incredibly exciting, it presents a challenge to those whose livelihoods may be affected.

📝 Generate a video with Luma Labs (free)

📝 Generate a video with Runway (free)

'Hallucinations' of AI: When Language Models Get Confused

A trending research paper dives into the limitations of LLMs, particularly their tendency to 'hallucinate'.

It argues that these hallucinations aren't just occasional errors but are fundamentally built into how LLMs work.

The paper shows that hallucinations are unavoidable, even with the best training data or the most advanced models.

It concludes that we need to accept these limitations and find ways to manage them, rather than trying to eliminate them completely.

Why it Matters

This research is a reality check for the growing excitement around LLMs. It highlights that even as LLMs get better, they will still sometimes make things up.

This is important for both the people who build these models and the people who use them.

Developers need to create systems that are open about their limitations and can catch potential errors.

Users need to be aware that even the most advanced LLMs can produce misleading information and should be used with a degree of scepticism.

Understanding these limitations is key to using LLMs responsibly in fields like healthcare and education, where inaccurate output could cause harm.
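On the developer side, one cheap way to catch potential errors is a self-consistency-style check: ask the same question several times and treat disagreement as a warning sign. This is a sketch of a common mitigation, not something proposed in the paper. `ask` is a hypothetical function that samples one answer from your model (with non-zero temperature), and the 0.6 agreement threshold is an arbitrary example.

```python
from collections import Counter
from typing import Callable


def self_consistency_check(ask: Callable[[str], str], question: str,
                           samples: int = 5,
                           min_agreement: float = 0.6) -> tuple[str, float, bool]:
    """Ask the same question several times and flag answers the model can't agree on."""
    # Light normalisation so "Paris" and "paris " count as the same answer.
    answers = [ask(question).strip().lower() for _ in range(samples)]
    best, count = Counter(answers).most_common(1)[0]
    agreement = count / samples
    # Low agreement is a cheap signal (not proof) that the answer may be made up.
    return best, agreement, agreement >= min_agreement
```

High agreement isn’t a guarantee of accuracy, since a model can be consistently wrong, which is why the paper’s advice to manage hallucinations rather than assume they’re gone still applies.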

That’s a Wrap!

If you want to chat about what I wrote, you can reach me through LinkedIn.

If you liked it, I’d appreciate a share!