AI in War 🚨 Open-Source Just Won 👑 GPT-4o Mini: A New Powerhouse 🤖

Your weekly dose of AI insights

Welcome to this week in AI.

This week, we look at how Meta’s Llama 3.1 release will change the game, how AI in war is becoming far more prevalent, and lastly the macro influences on AI, including new UN policy proposals.

Let’s get into it!

👋 If you’re new here, welcome!

Subscribe to get your AI insights every Thursday.

Meta's Llama 3.1: A New Era of Open-Source AI Dominance

For the first time, an open-source model is near parity with the frontier proprietary models. This is a huge development.

Meta's release of Llama 3.1 is part of their "scorched earth" AI strategy. Democratising the foundational models means:

Significantly reduced reliance on expensive closed-source models (think OpenAI’s GPT models). This in turn forces a race to the bottom on pricing.

Complete control of the Llama model, which developers can fine-tune for their own specific use cases.

Meta's release of these models isn't solely about the technology itself. It's about establishing an ecosystem. This ecosystem, with its shared tools and standards, will become a magnet for developers. Their collective work, steered by Meta's influence, will ultimately determine the direction of AI innovation.

The flagship Llama 3.1 model has 405 billion parameters and was trained on 15 trillion tokens using over 16,000 H100 GPUs, making it the largest open-source model ever released.

Mark Zuckerberg, Meta's CEO, envisions a future where open-source AI becomes the industry standard, much like Linux revolutionised operating systems.

In his words, "I think Llama has the opportunity to become the open-source AI standard, and for open source to become the industry standard for AI."

Astoundingly, the day after the Llama 3.1 release, Mistral dropped Large 2. It’s another model that compares to the frontier models, even surpassing Llama 3.1 on some benchmarks like coding and math with less than a third of the parameters (123 billion).

In response to the Llama 3.1 release, OpenAI is allowing users to fine-tune the GPT-4o mini model for free, with up to 2M training tokens a day, for the next two months.

Why This Matters

Meta's release of Llama 3.1 and their broader AI strategy have significant implications for the future of artificial intelligence.

The open-source nature of Llama 3.1 democratises AI, making it accessible to a broader range of developers and researchers. This can lead to accelerated innovation and a more diverse range of AI applications.

Furthermore, Meta's aggressive push for open-source AI is challenging the dominance of closed models and proprietary platforms.

By offering a powerful, accessible, and cost-effective alternative, Meta is reshaping the AI landscape and setting a new standard for collaboration and openness in the field.

As Zuckerberg puts it, "Open source AI represents the world's best shot at harnessing this technology to create the greatest economic opportunity and security for everyone."

With Meta projecting its Llama-based AI assistant to surpass ChatGPT's usage by the end of the year, Llama 3.1 is poised to significantly impact the AI industry and the broader technological landscape.

Zuckerberg explains his thinking in this video

The Rise of AI in Warfare: A Double-Edged Sword

The use of AI in warfare is rapidly expanding, with Ukraine at the forefront of this technological advancement.

Ukrainian startups are developing AI systems to control drones, enabling them to overcome signal jamming, operate in swarms, and independently identify and strike targets.

This development is seen as crucial for Ukraine to counter Russia's increasing electronic warfare capabilities.

One such company, Swarmer, is creating software to network drones, allowing for decentralised decision-making and coordinated attacks. While still in development, this technology promises to enhance the effectiveness and scale of drone operations.

AI is already being used in some of Ukraine's long-range strikes, with swarms of up to 20 drones targeting military facilities deep inside Russia.

The increasing use of AI in warfare, however, is not without its challenges. It raises ethical concerns about autonomous weapons and the potential for machines to make life-or-death decisions without human intervention.

Why This Matters

The integration of AI into warfare represents a significant shift in military technology. It has the potential to revolutionise how wars are fought, with drones and other autonomous weapons playing an increasingly prominent role.

Autonomous weapons are not the only use of AI in war. The Israel Defense Forces used an AI-enabled targeting system to label as many as 37,000 Palestinians as suspected militants during the first weeks of its war in Gaza.

While this technology could offer tactical advantages and potentially reduce human casualties as well as the cost of war, it also raises profound ethical and legal questions.

The development of AI-powered weapons systems is a global phenomenon, with countries like the U.S., Russia, and China heavily investing in this area.

Recently, allies of former President Trump have reportedly been drafting an AI-focused executive order, described as the “AI Manhattan Project”. It is aimed at boosting military AI development and rolling back current regulations.

An AI arms race is on and the need for international regulations to govern the use of autonomous weapons is paramount.

📰 Article on drones in Ukraine by Reuters

📝 Article on the evolution of autonomous weapons by The Guardian

Nvidia Adapts to U.S. Export Controls with New AI Chip for China

Nvidia is developing the B20, a modified version of its flagship Blackwell AI chip, specifically for the Chinese market.

This strategic move aims to maintain Nvidia's presence in China while complying with tightened U.S. export controls on advanced semiconductors.

The B20, a less powerful version of the Blackwell B200, is set to launch in partnership with Inspur, a major Chinese distributor, in the second quarter of 2025.

This development comes as Nvidia's existing high-performance chip for China, the H20, has seen a surge in sales, with projected revenue exceeding $12 billion this year.

The introduction of the B20 chip underscores Nvidia's commitment to the Chinese market, even amidst growing competition from local players like Huawei and Enflame, which have benefited from the export restrictions.

Why This Matters

By complying with export regulations, the chip allows Nvidia to retain market share in China and offer advanced AI technology to Chinese customers.

The B20 chip reflects the ongoing technological rivalry between the U.S. and China, and the increasing complexity of navigating global trade regulations in the AI sector.

Finally, the success of the B20 chip could significantly impact Nvidia's revenue and the broader AI chip market, demonstrating the growing demand for AI technology in China and globally.

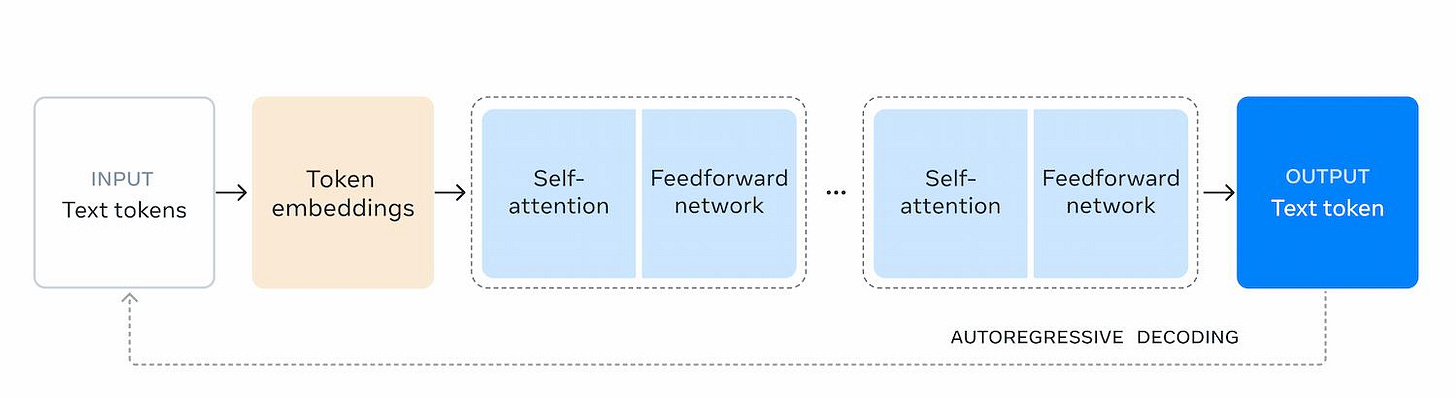

GPT-4o Mini: Faster, Cheaper, and Smarter

OpenAI has introduced GPT-4o mini, a smaller and more cost-efficient AI model designed to make artificial intelligence accessible to a broader audience.

As Olivier Godement, OpenAI's Head of Product API, stated, "For every corner of the world to be empowered by AI, we need to make the models much more affordable. I think GPT-4o mini is a really big step forward in that direction."

Priced at 15 cents per million input tokens and 60 cents per million output tokens, GPT-4o mini is considerably more affordable than its predecessors. It is more than 60% cheaper than GPT-3.5 Turbo, which it also outperforms.
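To make those prices concrete, here is a minimal Python sketch of the arithmetic. The two prices are the ones quoted above; the workload (10,000 requests of 1,000 input and 250 output tokens each) and the function name `estimate_cost` are made up purely for illustration, and real bills may differ.

```python
# Prices quoted above, in USD per million tokens.
INPUT_PRICE_PER_MILLION = 0.15
OUTPUT_PRICE_PER_MILLION = 0.60


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for the given token counts."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


# Hypothetical workload (an assumption, not from OpenAI):
# 10,000 requests, each with 1,000 input tokens and 250 output tokens.
requests = 10_000
total = estimate_cost(requests * 1_000, requests * 250)
print(f"Estimated cost: ${total:.2f}")  # prints "Estimated cost: $3.00"
```

In other words, under these assumptions ten thousand short requests would cost about three dollars.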

The model has significant speed improvements, processing text more than twice as fast as GPT-4o and GPT-3.5 Turbo.

Why This Matters

For businesses, GPT-4o mini's affordability could lead to significant cost savings compared to using larger, more expensive models. This could encourage wider adoption of AI technologies.

For consumers, the integration of GPT-4o mini into ChatGPT could lead to a more seamless and responsive user experience.

Overall, GPT-4o mini represents a significant advancement in making models smaller and more powerful. This reduces costs and the amount of compute needed to run them. Eventually, we’ll run models like GPT-4o mini on our personal devices.

📝 Interesting post on GPT-4o mini's effect on advertisement

Is Regulation Stifling AI Innovation?

The United Nations (UN) is proposing a unified global forum for AI governance to address the growing concerns and opportunities surrounding advanced AI technologies.

This initiative aims to bring together diverse stakeholders, including governments, industry leaders, and civil society, to establish common standards and guidelines for AI development and use.

However, the proposal has been met with criticism from G7 countries and EU officials, who argue that it undermines existing efforts like the G7's "Hiroshima AI Process" and the EU's Artificial Intelligence Act.

They also express concerns that the UN's initiative may inadvertently advance China's agenda in the AI landscape.

Meanwhile, Meta's decision to withhold the release of its upcoming multimodal Llama model in the EU highlights the challenges companies face in navigating the complex and evolving regulatory landscape.

Meta cited the "unpredictable" regulatory environment as the reason for its decision. This follows a move by Apple, which decided not to roll out some of its new AI features in the EU due to similar concerns.

Why This Matters

Increasing and overlapping regulations, especially in regions like the EU, are posing significant challenges to AI innovation.

Companies like Meta and Apple are hesitant to release new AI products and features in the EU due to concerns about regulatory compliance.

While the UN's proposal aims to address the need for global cooperation in AI governance, it also raises questions about the effectiveness of creating another forum in an already crowded landscape of AI initiatives.

The proliferation of such initiatives could lead to further fragmentation and complexity, hindering rather than facilitating AI development.

📰 Article by The Guardian on Meta's EU decision

Natural Gas: A Controversial Fuel Powering the AI Boom

The AI industry is positioned for significant growth, with a projected increase of 290 terawatt hours in electricity demand in the U.S. by the end of the decade, equivalent to the total power consumption of Turkey.

This surge is driven mainly by the expansion of data centres, which are essential for AI development and operations.
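For a sense of scale, the 290 terawatt hour figure can be turned into an average power draw. The short Python sketch below assumes the figure refers to extra demand per year (our reading, not something the source spells out) and ignores leap years; the function name `average_power_gw` is just for illustration.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def average_power_gw(annual_energy_twh: float) -> float:
    """Convert annual energy in TWh to average continuous power in GW."""
    # 1 TWh = 1,000 GWh, and GWh divided by hours gives GW.
    return annual_energy_twh * 1_000 / HOURS_PER_YEAR


# Assumption: the projected 290 TWh is additional demand per year.
extra_demand_gw = average_power_gw(290)
print(f"Average extra load: {extra_demand_gw:.1f} GW")  # prints "Average extra load: 33.1 GW"
```

Under that assumption, the projected increase works out to roughly 33 gigawatts of generation running around the clock.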

Alan Armstrong, CEO of Williams Companies, a major U.S. pipeline operator, argues that natural gas is crucial for meeting this escalating demand and ensuring that the U.S. remains a leader in AI.

He sees the issue as a national security concern and warns that failing to embrace natural gas could hinder the country's progress in this critical field.

Industry leaders are echoing Armstrong's sentiment, with major independent data centre developers directly approaching Williams Companies for natural gas supply.

Why This Matters

The growth of AI presents both opportunities and challenges. While AI promises significant advancements in various fields, its increasing energy demands raise concerns about environmental impact, particularly greenhouse gas emissions from natural gas.

The debate over the role of natural gas in powering AI is complex. While it offers a reliable and readily available energy source, its environmental implications cannot be ignored.

The AI industry's reliance on natural gas could exacerbate greenhouse gas emissions and hinder the transition to a cleaner energy future.

That’s a Wrap!

If you want to chat about what I wrote, you can reach me through LinkedIn.

Or give my editor a bell through his LinkedIn here.

If you liked it, give it a share!
