3 Major AI Model Launches: Grok-3, OpenAI & Anthropic 🧠 Scaling Laws Hold 🚀 Meta Enters Robotics 🦾

PLUS: The first real copyright challenge to training data & former OpenAI CTO launches challenger

👋 This week in AI

🎵 Podcast

Don’t feel like reading? Listen to two synthetic podcast hosts talk about it instead.

📰 Latest news

xAI's Grok-3: Reaffirming AI Scaling Laws

xAI's latest model, Grok-3, does more than post better benchmark scores: it offers strong validation of the scaling laws in AI development.

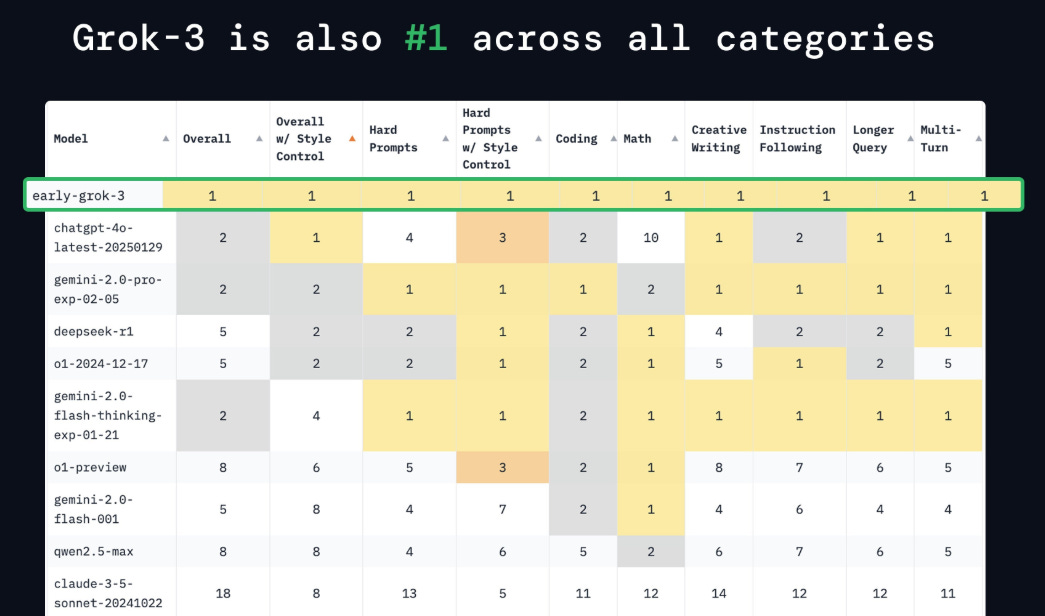

Grok-3 posts strong results across benchmarks in mathematics, graduate-level science, and coding, where it outperforms established models such as GPT-4o, Claude 3.5 Sonnet, and Gemini 2.0 Pro. It also took the top spot on Chatbot Arena, with a score above 1400.

These results make Grok-3, at the very least, state of the art.

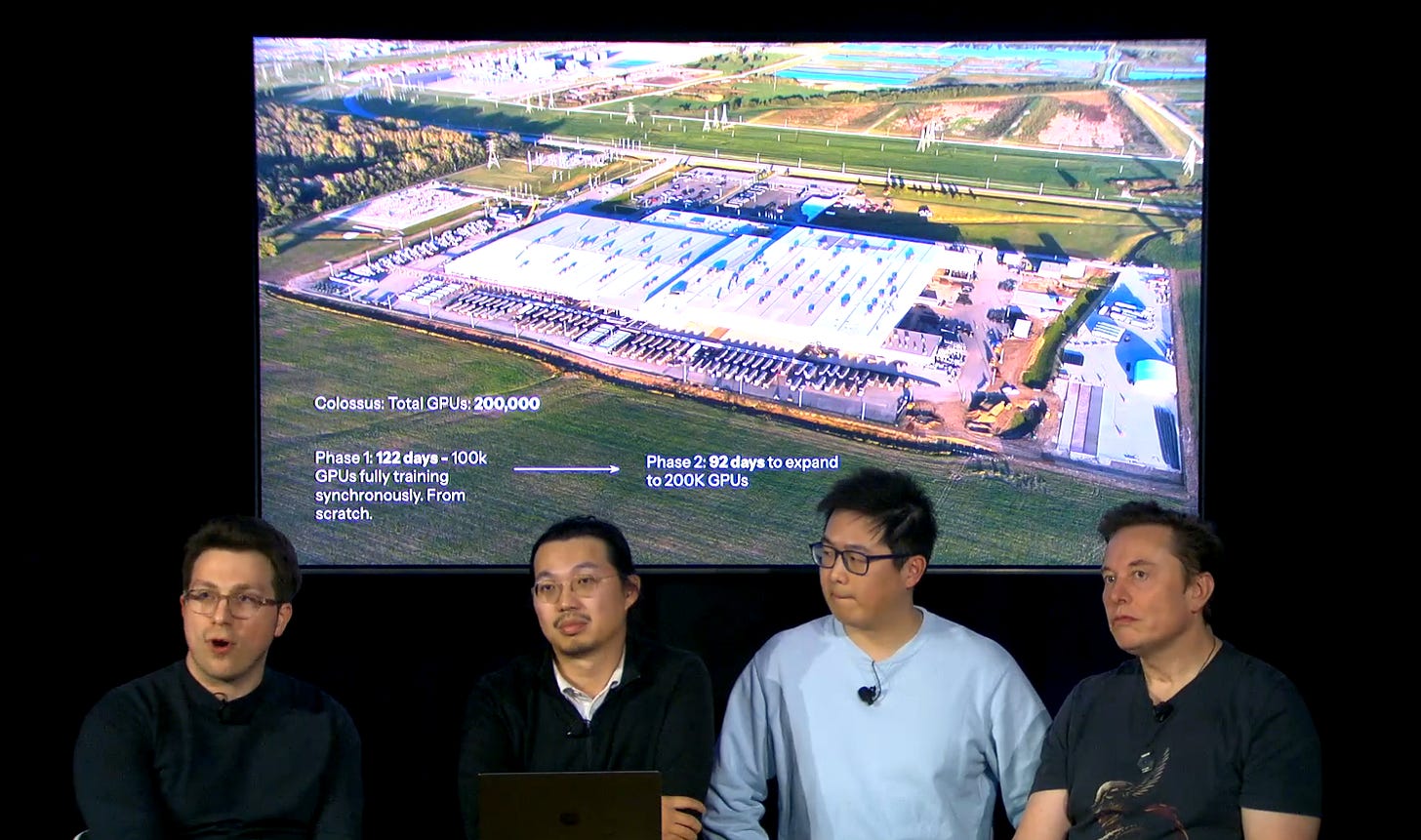

Crucially, Grok-3 was trained on a cluster of 200,000 NVIDIA H100 GPUs, a tenfold increase in compute over its predecessor, Grok-2.

This heavy investment in compute fits Rich Sutton's "Bitter Lesson", which holds that general learning methods scaled up with more compute tend to beat approaches that rely on hand-crafted features or algorithmic optimisations.
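To make "scaling laws" concrete: empirical work such as Kaplan et al. (2020) found that language-model loss falls roughly as a power law in training compute, L(C) ∝ C^(-α). The sketch below assumes that form and an illustrative exponent of 0.05 (in the range Kaplan et al. reported for compute). Neither is a figure xAI has published for Grok-3. Under those assumptions, a tenfold compute increase leaves about 89% of the original loss, a reduction of roughly 11%.

```python
def loss_ratio(compute_multiplier: float, alpha: float = 0.05) -> float:
    """Return L(k*C) / L(C) under an assumed power law L(C) = a * C**(-alpha).

    The constant a cancels out, so only the compute multiplier k and the
    exponent alpha matter. alpha=0.05 is an illustrative assumption.
    """
    if compute_multiplier <= 0:
        raise ValueError("compute_multiplier must be positive")
    return compute_multiplier ** (-alpha)


if __name__ == "__main__":
    # Grok-3 reportedly used ~10x the compute of its predecessor.
    ratio = loss_ratio(10)
    print(f"loss ratio: {ratio:.3f}")
    print(f"loss reduction: {1 - ratio:.1%}")
```

Because the relationship is a power law, every further 10x of compute buys the same fractional drop in loss, which is why each step up in capability demands a much larger cluster than the last.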

Grok-3 introduces "Think Mode" and "Big Brain Mode" to enhance reasoning, letting the model work through problems step by step to improve accuracy.

It also features DeepSearch, a tool that searches the internet and summarises what it finds, comparable to offerings from OpenAI and Perplexity AI, though parts of DeepSearch remain in beta. A smaller variant, Grok-3 mini, trades reasoning depth for speed.

Why It Matters

Grok-3's success is a key moment, representing perhaps the clearest validation of the scaling laws since GPT-4.

It underscores that significant advances in AI capability can still be achieved by scaling up compute. Algorithmic improvements and optimisations (of the kind DeepSeek, and indeed every AI lab, relies on) are valuable, but Grok-3 shows that raw computational power remains a primary driver of progress.

xAI's approach contrasts with that of companies like DeepSeek, which achieved notable results with fewer resources through intense optimisation, while operating under export controls that restrict its access to advanced GPUs.

It's also notable that xAI is a relatively new entrant to the field, founded in 2023, less than two years ago. Grok-3 marks a rapid ascent, letting xAI catch up with the performance of more established players.

However, the competitive landscape remains dynamic. OpenAI and Anthropic are expected to release their next-generation flagship models soon, potentially shifting the balance of power once again.

Grok-3 is currently available to X Premium+ subscribers.

𝕏 Technical review by Andrej Karpathy (OpenAI co-founder)

𝕏 Watch the live stream of Elon Musk and the xAI team launching Grok-3

OpenAI Announces GPT-4.5 ("Orion") Release in Weeks, GPT-5 to Follow

OpenAI CEO Sam Altman has announced a revised roadmap for the company's upcoming AI models, focusing on simplification and integration.

The immediate next step is GPT-4.5, codenamed "Orion", which Altman described as OpenAI's last "non-chain-of-thought" model. It is slated for release within "weeks."

Following GPT-4.5, the focus shifts to GPT-5, envisioned as a unified system that integrates various OpenAI technologies, including the previously anticipated "o3" model, which will no longer be released separately.

This integration aims to eliminate the need for users to choose between different models, providing a streamlined experience where the system automatically selects the best tools and capabilities for a given task.

GPT-5 will be offered through a tiered access system:

Free users will have unlimited access to GPT-5 at a "standard intelligence" setting.

Paid tiers, Plus and Pro, will unlock progressively higher levels of intelligence and access to advanced tools.

These tools include voice interaction, canvas capabilities, search functionality, and deep research features.

Deep Research will also come to the free tier (2 queries per month), while Plus users get 10 queries per month.

The o3-mini-high limit for Plus users is also rising to 50 messages per day.
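The usage limits announced so far fit in a small lookup table. This is a sketch only: the tiers and numbers come from the announcement (the 50-per-day o3-mini-high limit applies to Plus), while the `TierLimits` structure, the `remaining_deep_research` helper, and the use of `None` for limits not stated (Pro, and o3-mini-high outside Plus) are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TierLimits:
    """Announced usage limits for one ChatGPT tier (None = not stated)."""
    deep_research_per_month: Optional[int]
    o3_mini_high_per_day: Optional[int]


# Figures from the announcement; None marks limits it did not give.
LIMITS = {
    "free": TierLimits(deep_research_per_month=2, o3_mini_high_per_day=None),
    "plus": TierLimits(deep_research_per_month=10, o3_mini_high_per_day=50),
    "pro": TierLimits(deep_research_per_month=None, o3_mini_high_per_day=None),
}


def remaining_deep_research(tier: str, used_this_month: int) -> Optional[int]:
    """Hypothetical helper: Deep Research queries left this month, or None if unknown."""
    cap = LIMITS[tier].deep_research_per_month
    if cap is None:
        return None
    # Never report a negative balance.
    return max(cap - used_this_month, 0)


if __name__ == "__main__":
    for tier in LIMITS:
        print(tier, remaining_deep_research(tier, used_this_month=1))
```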

No firm date has been given for GPT-5 beyond "months."

Why It Matters

OpenAI's updated roadmap represents a move towards a more user-friendly approach to AI. By consolidating its models and tools into a single, integrated system (GPT-5), the company aims to simplify the user experience.

This change suggests a shift in how AI capabilities are packaged and delivered: away from a fragmented line-up of models and towards a single, more accessible system that adapts automatically to a wide range of tasks.

Anthropic Catching Up with Next Model in "Weeks"

Anthropic is reportedly preparing to launch a new AI model "within weeks."

This upcoming model is described as a "hybrid", able to function both as a standard large language model (LLM) and as a deep reasoning engine, so it can adapt to different use cases on demand.

A key feature of the model is a "sliding scale" that gives developers precise control over how much reasoning power each query receives, letting them balance performance against computational cost.
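One way to picture a "sliding scale" is a single setting that maps to a cap on how many tokens the model may spend reasoning before it answers, with worst-case cost rising in step. The sketch below is purely illustrative: Anthropic has not described this interface, and the `plan_reasoning` function, the 0 to 1 slider, the token bounds, and the per-token price are all hypothetical.

```python
from dataclasses import dataclass

# All constants are hypothetical placeholders, not Anthropic's figures.
MIN_REASONING_TOKENS = 0        # slider at 0: answer directly, no extended reasoning
MAX_REASONING_TOKENS = 32_000   # slider at 1: largest reasoning budget
PRICE_PER_1K_TOKENS = 0.015     # assumed cost in dollars per 1,000 reasoning tokens


@dataclass(frozen=True)
class ReasoningPlan:
    budget_tokens: int
    max_cost_usd: float


def plan_reasoning(slider: float) -> ReasoningPlan:
    """Map a 0..1 reasoning slider linearly onto a token budget and worst-case cost."""
    if not 0.0 <= slider <= 1.0:
        raise ValueError("slider must be between 0 and 1")
    span = MAX_REASONING_TOKENS - MIN_REASONING_TOKENS
    budget = round(MIN_REASONING_TOKENS + slider * span)
    cost = budget / 1000 * PRICE_PER_1K_TOKENS
    return ReasoningPlan(budget_tokens=budget, max_cost_usd=cost)


if __name__ == "__main__":
    for setting in (0.0, 0.25, 1.0):
        plan = plan_reasoning(setting)
        print(f"slider={setting:.2f} -> {plan.budget_tokens} tokens, up to ${plan.max_cost_usd:.2f}")
```

A linear mapping is the simplest choice. A real system might scale the budget non-linearly, or let the model stop reasoning early when a query turns out to be easy.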

At its maximum reasoning setting, the model reportedly excels at real-world programming tasks and can analyse large codebases effectively, outperforming OpenAI's o3-mini-high "reasoning" model on some programming benchmarks.

Anthropic CEO Dario Amodei recently hinted at this new approach, expressing a focus on creating reasoning models that are "better differentiated" and questioning the traditional separation between "normal" and "reasoning" models.

This release follows a period of relative quiet from Anthropic since the launch of Claude 3.5 Sonnet.

Why It Matters

Anthropic's new model, with its hybrid approach and developer-focused controls, could offer greater flexibility and efficiency for various applications, particularly in software development.

The ability to adjust reasoning power on demand could be valuable for tasks requiring different levels of computational intensity.

The imminent release positions Anthropic to directly compete with recent and upcoming releases from other major AI players.

The rapid pace of development, with xAI quickly catching up to established players and OpenAI streamlining its model line-up, suggests that Anthropic's release is, at least in part, a move to maintain its competitive edge.

The pressure to deliver cutting-edge capabilities is intense, and the hybrid model appears to be Anthropic's strategic move to remain at the forefront of AI innovation.

📰 Article by TechCrunch on the upcoming release

Former OpenAI CTO Mira Murati Launches Competitor: Thinking Machines Lab

Mira Murati, previously the Chief Technology Officer at OpenAI, has officially launched Thinking Machines Lab, a new artificial intelligence research and product company.

The lab's stated mission is to make AI systems "more widely understood, customisable, and generally capable." A core tenet of this mission is a commitment to open science. Thinking Machines Lab plans to regularly publish technical papers, code, datasets, and model specifications.

The company boasts a team of prominent AI researchers and engineers, including former OpenAI colleagues John Schulman (Chief Scientist) and Barret Zoph (CTO), as well as experts from DeepMind, Character AI, and Mistral. Many helped build some of the most widely used AI products and tools, including ChatGPT, Character.ai, PyTorch, and Mistral's models.

The lab's research will focus on areas such as science, programming, human-AI collaboration, and multimodality. The launch comes roughly five months after Murati left OpenAI in September 2024.

Why It Matters

The formation of Thinking Machines Lab, led by a prominent figure from OpenAI, adds another player to the increasingly competitive AI research landscape.

The lab's emphasis on open science could influence how AI research is conducted and shared, potentially fostering greater collaboration and transparency within the field.

This follows a trend of former OpenAI leaders founding rival labs; Ilya Sutskever's Safe Superintelligence (SSI) is also reportedly raising substantial funding.

The focus on customisation, combined with the team's expertise, suggests that Thinking Machines Lab aims to develop AI systems that are not only powerful but also adaptable to a wide range of user needs.

Meta Enters Humanoid Robotics Race with New Reality Labs Division

Meta Platforms is making a foray into the humanoid robotics field, establishing a new division within its Reality Labs unit dedicated to developing AI-powered robots for consumer use.

This new group will focus on research and development of "consumer humanoid robots," leveraging Meta's Llama AI foundation models.

This move places Meta in direct competition with other tech companies actively pursuing humanoid robotics, including Tesla, Nvidia-backed Figure AI, and Apptronik.

The initiative builds upon Meta's long-standing research into "embodied AI," aiming to create AI assistants capable of interacting with and navigating the physical world.

The Reality Labs unit lost approximately $5 billion in the fourth quarter of 2024 alone.

Why It Matters

Meta's entry into humanoid robotics is another step towards bringing AI into the physical world. Progress in robotics has been slower than in language-based AI, but more capable AI models are driving renewed interest and investment in the area.

Meta's focus on consumer applications, rather than industrial or manufacturing uses, puts it in relatively unexplored territory. The initiative is a bet on the long-term potential of humanoid robots, despite Reality Labs' ongoing losses.

Fiverr Launches Fiverr Go: The Future of Gig Work?

Fiverr, the freelance service platform, has introduced Fiverr Go, a new suite of AI-powered tools designed to help freelancers integrate AI into their workflow.

The core offering is AI Creation Models, which let freelancers train an AI model on their own style and then sell the AI-generated content it produces, at a cost of $25 per month. Freelancers retain ownership of both their style and the generated output.

Another key component is the Personal AI Assistant, available for $29 per month. It helps manage client communications, handles routine tasks, and provides customised responses based on past interactions.
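For a freelancer who subscribes to both tools, the outlay adds up quickly: $54 a month, or $648 a year. The prices are the ones quoted above; the assumption that both are billed monthly with no bundle discount is ours.

```python
# Monthly prices quoted for Fiverr Go tools (USD).
# Assumption: billed separately each month, no bundle discount.
PRICES_PER_MONTH = {
    "AI Creation Model": 25,
    "Personal AI Assistant": 29,
}


def total_cost(months: int) -> int:
    """Total spend for subscribing to every listed Fiverr Go tool for `months` months."""
    return sum(PRICES_PER_MONTH.values()) * months


if __name__ == "__main__":
    print(f"Per month: ${total_cost(1)}")
    print(f"Per year:  ${total_cost(12)}")
```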

Initial access to Fiverr Go is limited to "thousands" of vetted freelancers at Level 2 and above, specifically in categories such as voiceover, design, and copywriting.

Fiverr is also launching an equity program that will grant shares in the company to top-performing freelancers, although specific details about this program have not yet been released.

Why It Matters

Fiverr Go signals a potential shift in gig work, where freelancers become managers and trainers of AI, rather than solely creators. This could increase productivity and earnings for some, but also raises questions about the changing nature of creative work and potential inequalities.

The equity program suggests that platforms may increasingly rely on the combined output of humans and AI. Fiverr Go is an experiment that could shape the future of the gig economy.

First AI Training Copyright Case: Judge Rules Against ROSS in Suit by Thomson Reuters

In Thomson Reuters v. ROSS Intelligence (1:20-cv-00613-SB), Judge Stephanos Bibas delivered the first district court ruling addressing copyright infringement and fair use in the context of training AI models.

The judge granted summary judgment in favour of Thomson Reuters, finding that ROSS's use of Westlaw's headnotes to train its AI model did not constitute fair use.

This decision came after Judge Bibas, in a surprising turn, invited the parties to renew their summary judgment motions nearly a year after initially denying them, and subsequently reversed his earlier position.

The ruling hinged on the fact that ROSS was developing a legal research tool to compete with Westlaw, so its copying of Westlaw's headnotes was deemed not transformative.

The court found that ROSS infringed 2,243 of the 2,830 headnotes at issue (roughly 79%). It also rejected ROSS's "intermediate copying" defence, which has succeeded in some software cases (such as Sega v. Accolade, Sony v. Connectix, and Google v. Oracle).

The court distinguished those cases because the copied material here was editorial content (written words) rather than functional computer code, and because the copying was not necessary to achieve ROSS's new purpose. The ruling, delivered on February 11, 2025, addressed only non-generative AI.

Why It Matters

This landmark ruling sets an early precedent for AI training copyright cases, though it's limited to non-generative AI.

The decision raises concerns about the fair use defence for AI developers using copyrighted material, especially when creating competing products.

The rejection of the "intermediate copying" defence in this context is also noteworthy. While not definitive for generative AI, the case will help shape the legal landscape for AI and copyright.